Publications

Working documents

Cross or Nah? LLMs Get in the mindset of a pedestrian in front of automated car with an eHMI

Alam, M. S., Bazilinskyy, P.

Submitted for publication.

This study examines the effectiveness of using large language model-based personas to evaluate external Human-Machine Interfaces (eHMIs) on automated vehicles. Thirteen models, namely BakLLaVA, ChatGPT-4o, DeepSeek-VL2, Gemma 3: 12B, Gemma 3: 27B, Granite Vision 3.2, LLaMA 3.2 Vision, LLaVA-13B, LLaVA-34B, LLaVA-LLaMA-3, LLaVA-Phi3, MiniCPM-V, and Moondream, were used to simulate pedestrian perspectives. The models assessed images of vehicles displaying an eHMI and rated their crossing decision on a scale from 0 (completely unwilling) to 100 (fully confident). Each model was run 15 times across the full set of images, both with and without prior conversational context. The resulting confidence scores were then compared with crowdsourced human ratings. The findings indicate that Gemma 3: 27B performed best without chat history (r = 0.85), while ChatGPT-4o performed best when the conversational history was included (r = 0.81). In contrast, DeepSeek-VL2 and BakLLaVA gave similar scores regardless of context, while LLaVA-LLaMA-3, LLaVA-Phi3, LLaVA-13B, and Moondream produced only limited-range outputs in both cases.
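The abstract does not state how the 15 runs were aggregated before comparison with the human ratings. As a minimal sketch, assuming each image's model score is the mean over all runs and that agreement is the Pearson correlation with the mean human rating per image, the comparison could look like this. `pearson_r` and `model_human_agreement` are hypothetical names, not the authors' code; on Python 3.10+ `statistics.correlation` computes the same coefficient.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        # Constant scores (e.g. a model that always answers 50) have no
        # variance, so the correlation is undefined.
        raise ValueError("zero variance: correlation undefined")
    return cov / (sx * sy)


def model_human_agreement(runs, human):
    """Correlate run-averaged model scores with human ratings.

    runs:  list of runs; each run is a list of 0-100 scores, one per image.
    human: mean crowdsourced rating per image, in the same image order.
    """
    # zip(*runs) groups the scores for each image across all runs.
    per_image = [mean(scores) for scores in zip(*runs)]
    return pearson_r(per_image, human)


if __name__ == "__main__":
    # Toy numbers for illustration only: 2 runs over 3 images.
    runs = [[10, 60, 90], [20, 50, 80]]
    human = [15, 55, 85]
    print(round(model_human_agreement(runs, human), 3))
```

Averaging before correlating treats repeated runs as a way to reduce per-run noise; a model whose outputs barely vary (the limited-range case above) yields a weak or undefined correlation regardless.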

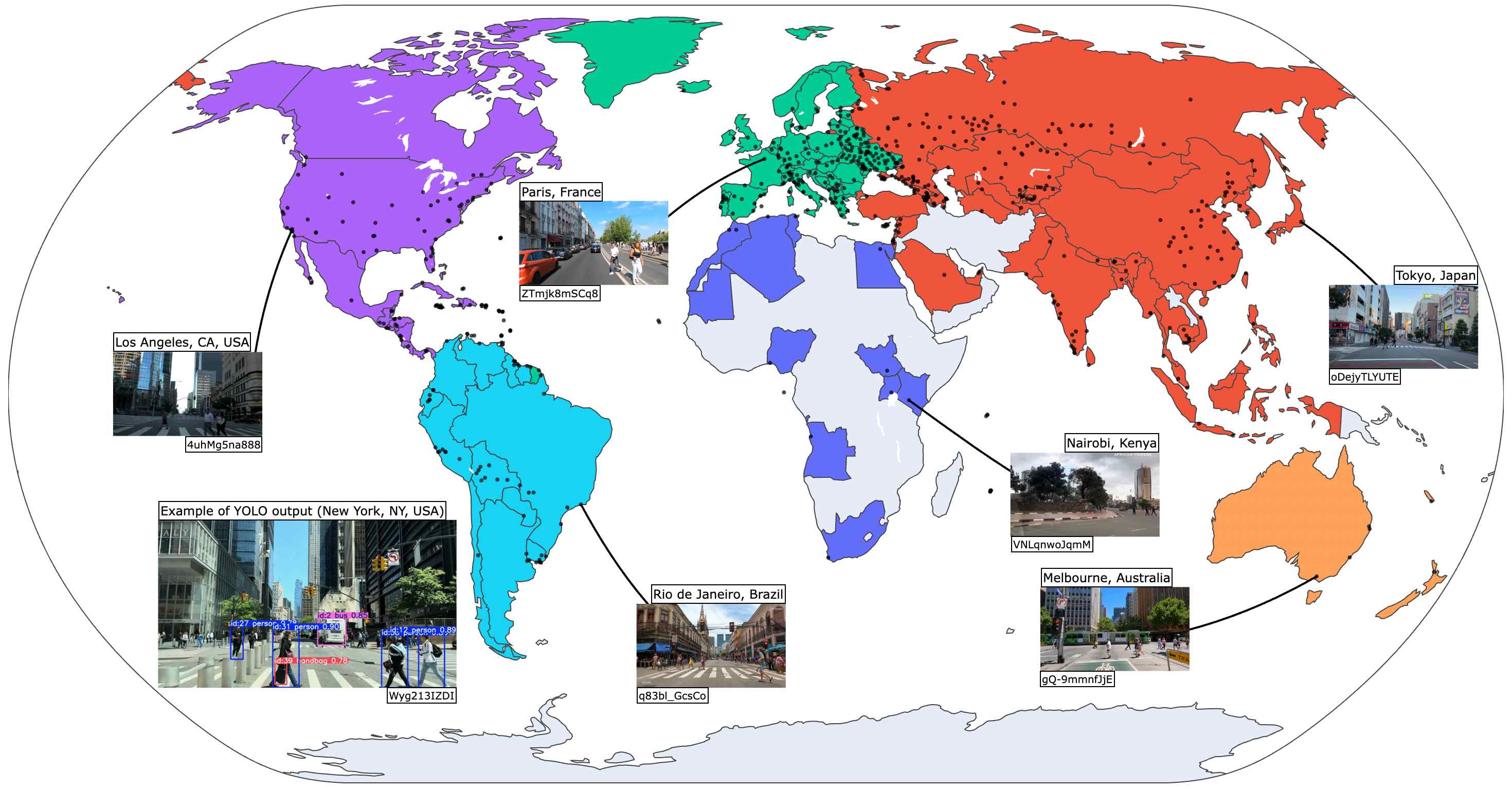

Understanding global pedestrian behaviour in 565 cities with dashcam videos on YouTube

Alam, Md. S., Martens, M. H., Bazilinska, O., Bazilinskyy, P.

Submitted for publication.

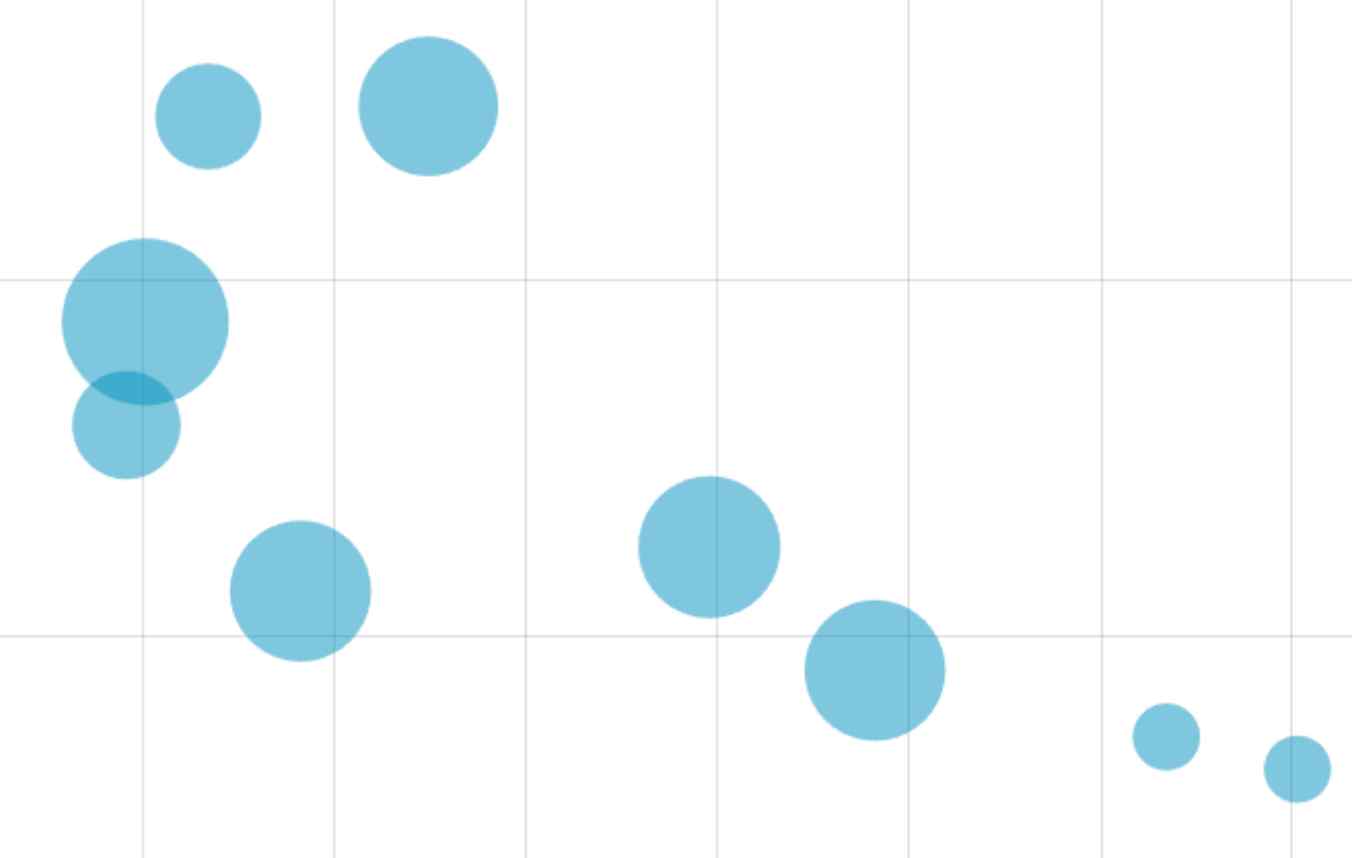

The interactions between future cars and pedestrians should be designed to be understandable and safe worldwide. Although previous research has studied vehicle-pedestrian interactions within specific cities or countries, this study offers a more scalable and robust approach by examining pedestrian behaviour worldwide. We present a dataset, Pedestrians in YouTube (PYT), which includes 1562.80 hours of daytime and night-time YouTube dashcam footage from 565 cities in 104 countries. The included videos feature continuous urban driving, are at least 10 min long, contain no atypical events, represent everyday conditions, and come from cities with a population of at least 20,000. We detected pedestrian movements, focussing on crossing speed and crossing decision time during road crossings, based on the bounding boxes produced by YOLOv11x. The results revealed statistically significant variations in pedestrian behaviour influenced by socioeconomic and environmental factors such as Gross Metropolitan Product, traffic-related mortality, Gini coefficient, traffic index, and literacy. The dataset is publicly available to encourage further research on global pedestrian behaviour.

Exploring Veo 3's capabilities for generating urban traffic scenes in 76 cities worldwide

Alam, M. S., Wang, Z., Zhang, L., Bazilinskyy, P.

Submitted for publication.

This study explores the potential of Google Veo 3, a generative video model, to synthesise 8-second dashcam-style urban traffic scenes based solely on text prompts for 76 cities across six continents. YOLOv11x was used to count objects such as road users, traffic lights, and stop signs, revealing variations across cities: Karachi had the most objects detected (79), while Muscat had only four cars. Audio analysis using dBFS showed that Montevideo was the loudest, while Copenhagen was the quietest. Through a qualitative visual analysis, we assessed and confirmed the perceived authenticity of most traffic scenes and highlighted AI errors, including the inability to handle non-English languages in these videos. Moreover, we compared 10 synthetic videos each of New York City and Kampala and verified that Veo 3 is consistent. To summarise, Veo 3 is capable of synthesising authentic, logical traffic scenes worldwide; nevertheless, it still makes non-negligible errors.
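The abstract does not say how the dBFS levels were computed. As a hedged sketch, assuming each clip's audio track has been decoded to floating-point samples in [-1, 1], an RMS level in dBFS can be computed per clip and used to rank cities. `rms_dbfs` is a hypothetical helper; other conventions (for example, the AES17 sine-referenced dBFS, which reads about 3.01 dB higher) shift every value by the same amount and therefore do not change the ranking.

```python
import math


def rms_dbfs(samples):
    """RMS level of float samples in [-1.0, 1.0], in dB relative to full scale.

    Assumed convention: an RMS of 1.0 (a full-scale square wave) is 0 dBFS,
    so a full-scale sine wave reads about -3.01 dBFS.
    """
    if not samples:
        raise ValueError("no samples")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0.0:
        return float("-inf")  # digital silence
    return 20.0 * math.log10(rms)


if __name__ == "__main__":
    # Hypothetical clips; real ones would be decoded from each video's audio track.
    clips = {"city_a": [0.5, -0.5] * 4, "city_b": [0.1, -0.1] * 4}
    levels = {city: rms_dbfs(clip) for city, clip in clips.items()}
    loudest = max(levels, key=levels.get)
    quietest = min(levels, key=levels.get)
    print(loudest, quietest)
```

Because dBFS values are negative for anything below full scale, the loudest clip is the one with the value closest to zero.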

Pedestrian planet: What 1,609 hours of YouTube driving from 133 countries teaches us about the world

Alam, M. S., Martens, M. H., Bazilinskyy, P.

Submitted for publication.

Pedestrian crossing behaviour varies globally. This study analyses dashcam footage from the PYT dataset, covering 133 countries, to examine decision time to cross, crossing speed, and contextual variables, including detected vehicles, traffic mortality, GDP, and the Gini coefficient. Bulgaria had the longest decision time (10.50 s), while San Marino exhibited the fastest crossing speed (1.14 m/s). A global negative correlation between speed and decision time (r = -0.54) suggests that more cautious or uncertain pedestrians cross more slowly. Regional differences reveal stronger inverse correlations in Europe and North America, likely due to varying infrastructure, regulations, and cultures. Pedestrian decision time is positively correlated with the presence of other road users, especially bicycles (r = 0.35). Similar crossing times in countries with different infrastructures, such as Belgium and India, underscore the complex interaction between infrastructure and behavioural adaptation. These findings emphasise the importance of culturally aware road design and the development of adaptive interfaces for vehicles.

Vibe coding in practice: Building a driving simulator without expert programming skills

Fortes-Ferreira, M., Alam, M. S., Bazilinskyy, P.

Submitted for publication.

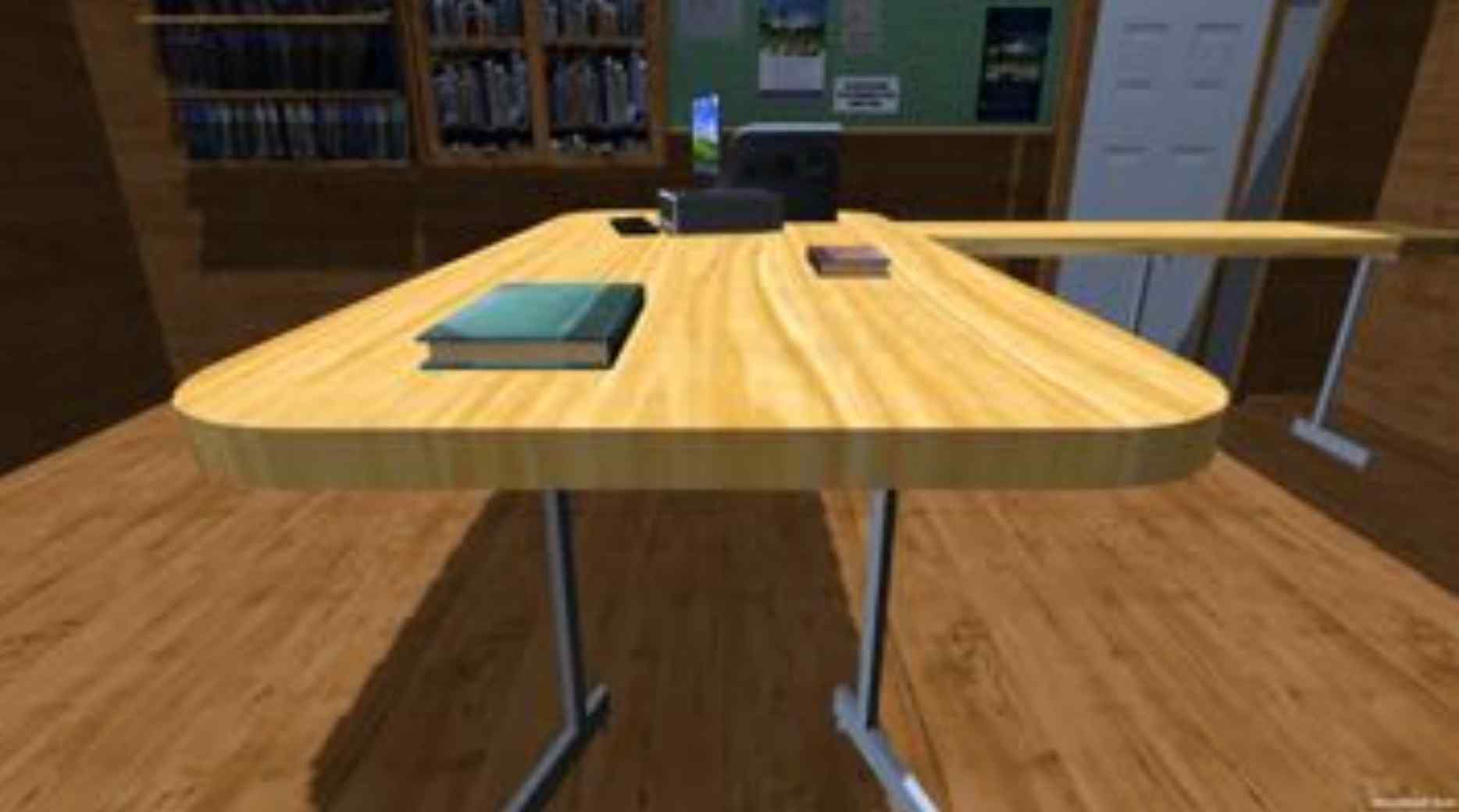

The emergence of large language models (LLMs) has introduced new opportunities in software development, particularly through a revolutionary paradigm known as vibe coding or 'coding by vibes', in which developers express their software ideas in natural language and AI generates the code. This exploratory case study investigated the potential of vibe coding to support non-expert programmers. A participant without coding experience attempted to create a 3D driving simulator using the Cursor platform and Three.js. Iterative prompting improved the simulation's functionality and visual quality. The results indicated that LLMs can reduce barriers to creative development and expand access to computational tools. However, challenges remain: prompts often required refinement, the generated code could be logically flawed, and debugging demanded a foundational understanding of programming concepts. These findings highlight that while vibe coding increases accessibility, it does not completely eliminate the need for technical reasoning or an understanding of prompt engineering.

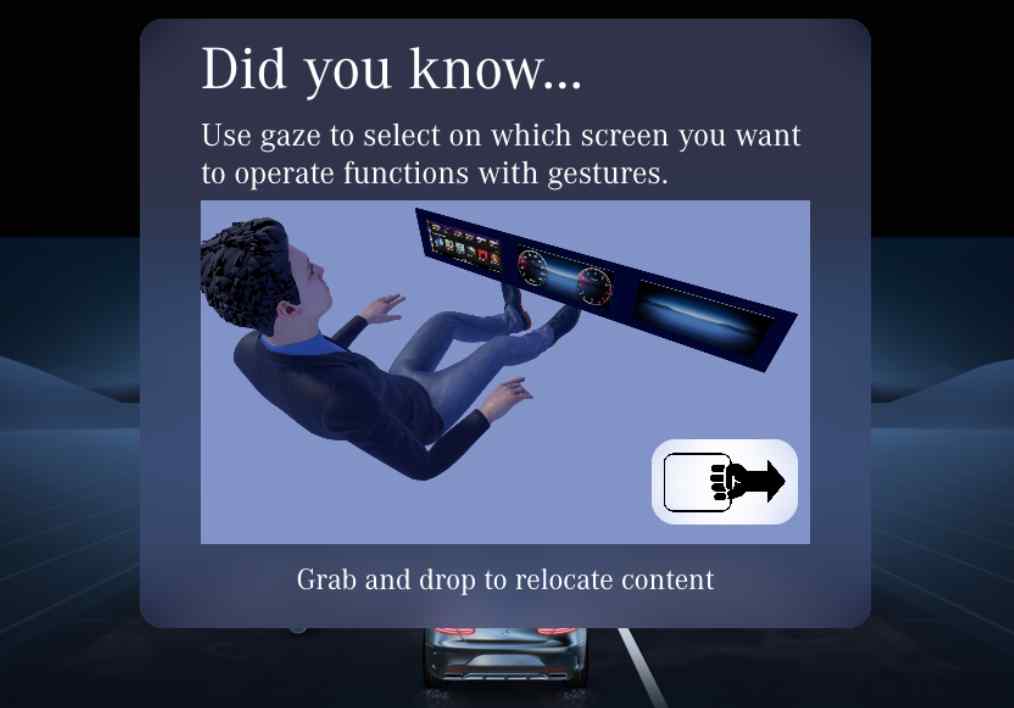

Teaching multimodal interaction in cars to first-time users

Marinissen, T., Glimmann, J., Bazilinskyy, P.

Submitted for publication.

This study explores three variations of a proactive method for teaching multimodal gaze and gesture interactions to first-time users of an SAE Level 5 automated vehicle. The three variations differed in size, placement on the screen, and whether active user input was required to receive additional information. The results of a user study with the gesture control prototype in a driving simulator (N = 30) show that the largest variation was the most effective for teaching, owing to significant differences in the visibility ratings (p < 0.001), size (p < 0.001), and duration (p = 0.001) of the pop-ups. The results show no correlation between measured effectiveness and preference for a specific variation. Across all variations, participants were positive about receiving proactive teaching from their car to learn new features. We conclude that proactively teaching users novel interaction methods has the potential to improve the user experience in future vehicles.

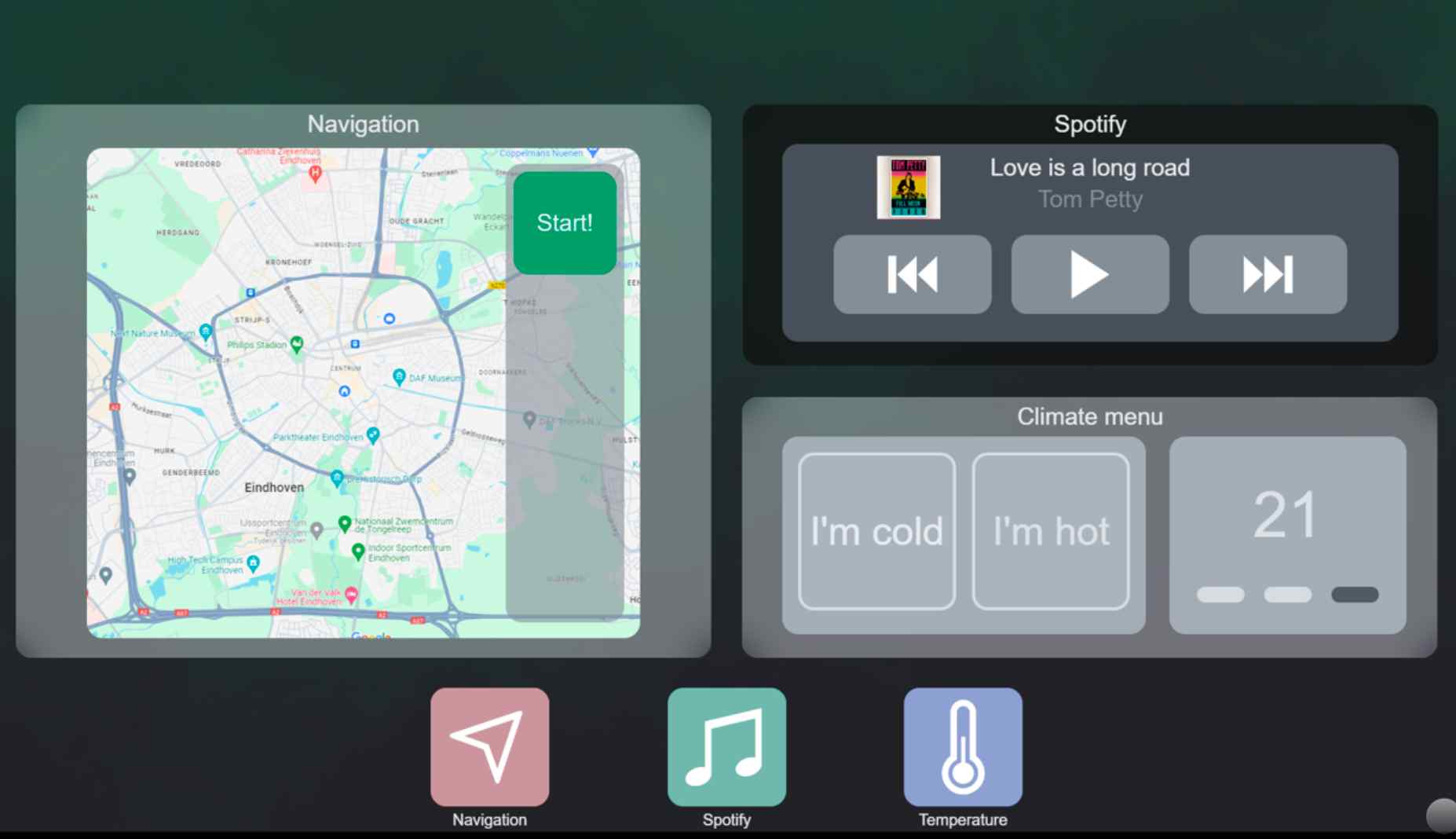

Visual feedback for in-car voice assistants

Marinissen, T., Bazilinskyy, P.

Submitted for publication.

This study presents a voice-operated automotive user interface (UI) with ambient color feedback, designed to enhance driver interaction and safety by providing more noticeable and aesthetically pleasing visual cues in peripheral vision. An online survey (N = 151) showed a strong preference for Android Auto and Apple CarPlay over car manufacturers' own UIs, with users favoring consistency between the UI of their car and the digital ecosystem of their phone. A user study (N = 24) compared ambient visual feedback with conventional feedback and no feedback; 18 participants preferred the ambient method, which significantly improved visibility and assistance value. These findings suggest that ambient visual feedback enhances automotive UIs.

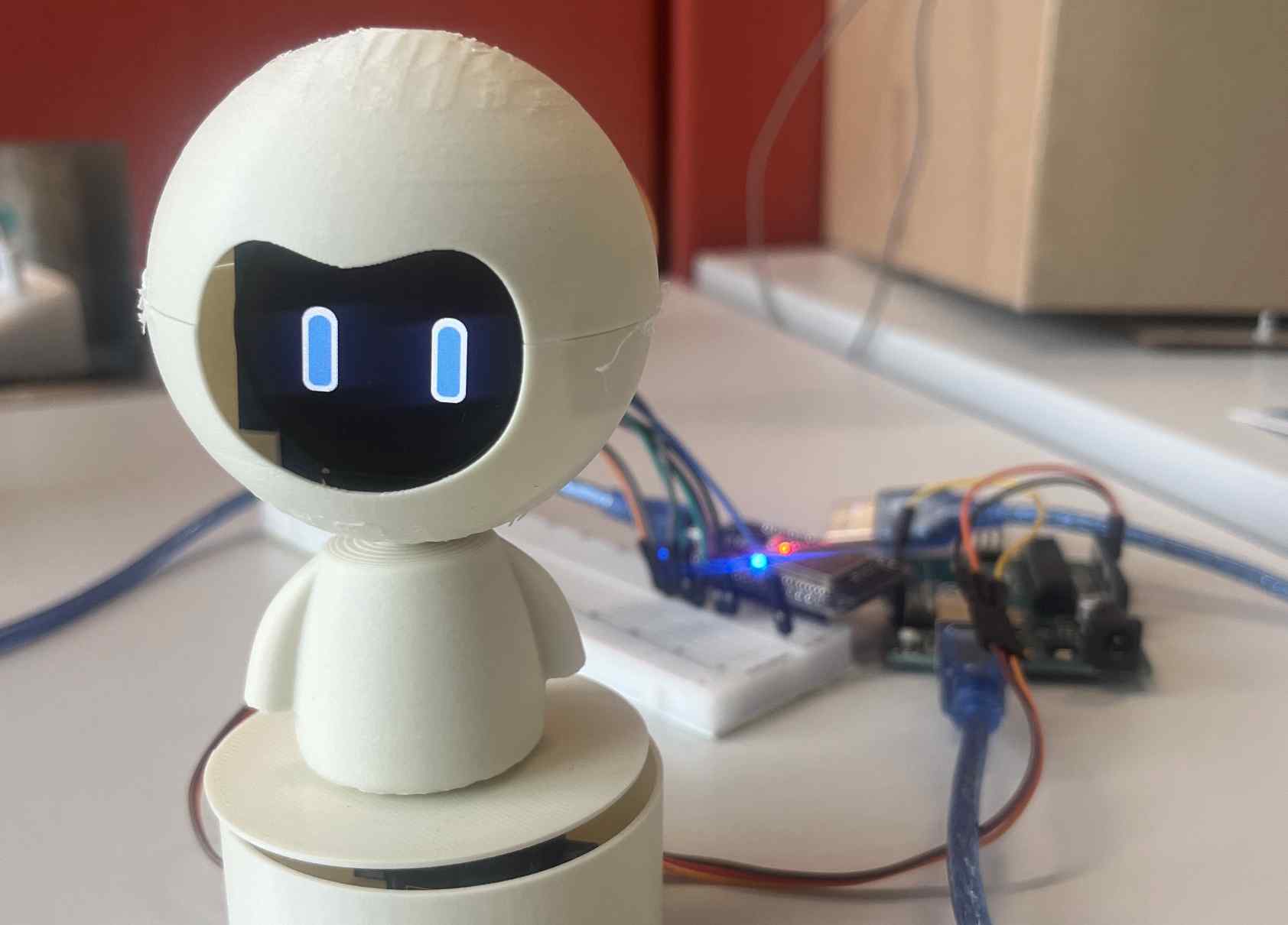

Robot-like in-vehicle agent for a level 3 automated vehicle with emotions

Zeng, X., Alam, M. S., Bazilinskyy, P.

Submitted for publication.

In-vehicle agents (IVAs) have emerged as a transformative innovation for intelligent transportation systems. This paper presents the development and evaluation of a robot-like IVA prototype with emotional feedback capabilities for SAE Level 3 automated vehicles. A user study assessed emotional interactions between drivers and the IVA. The results showed that emotional feedback and driver working status did not have a significant effect on average workload or acceptance (usefulness and satisfaction). However, emotional feedback influenced physical and temporal demands, and its interaction with working status significantly affected overall workload. Voice communication remained the main interaction mode, especially when drivers were engaged in other tasks. The study highlighted the challenges of accurately detecting emotions through facial recognition in automated driving scenarios, emphasised the need to consider physical conditions such as fatigue and stress, and provided insight into participants' perspectives on the IVA robot.

Robot-like in-vehicle agent for a level 3 automated vehicle

Zeng, X., Alam, M. S., Bazilinskyy, P.

Submitted for publication.

With the rapid development of automotive technology and artificial intelligence, in-vehicle agents have great potential to address the challenge of explaining the system status and intentions of an automated vehicle. A robot-like in-vehicle agent was designed and developed to explore how such an agent can communicate through gestures and facial expressions with the driver of an SAE Level 3 automated vehicle. An experiment with 12 participants was conducted to evaluate the prototype. The results showed that both facial expressions and gestures can reduce workload and increase usefulness and satisfaction. However, gestures seemed more functional and were preferred by drivers, whereas facial expressions seemed more emotional and were preferred by passengers. Furthermore, gestures were easier to notice but difficult to understand on their own, while facial expressions were hard to notice but more attractive.

2025

Deep learning approach for realistic traffic video changes across lighting and weather conditions

Alam, M. S., Parmar, S. H., Martens, M. H., Bazilinskyy, P.

Proceedings of International Conference on Information and Computer Technologies (ICICT). Hilo, HI, USA (2025)

Recent advances in GAN-based architectures have led to innovative methods for image transformation. The limited diversity of environmental conditions in public data, such as lighting and season, prevents researchers from effectively studying differences in road user behaviour under varying conditions. This study introduces a deep learning pipeline that combines CycleGAN-turbo and Real-ESRGAN to improve video transformations of traffic scenes. Evaluated on dashcam videos from Los Angeles, London, and Hong Kong, our pipeline demonstrates a notable improvement in T-SSIM for temporal consistency during night-to-day transformations, achieving a 7.97% increase for Hong Kong, 7.35% for Los Angeles, and 3.41% for London compared to CycleGAN-turbo. PSNR and VPQ scores are comparable, but the pipeline performs better in DINO structure similarity and KL divergence, with up to 153.49% better structural fidelity in Hong Kong compared to Pix2Pix and 107.32% better compared to ToDayGAN. This approach demonstrates better realism and temporal coherence in day-to-night, night-to-day, and clear-to-rainy transitions.
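PSNR and the relative percentage gains quoted above follow standard definitions that the abstract does not restate. A minimal sketch, assuming frames are flattened lists of 8-bit intensities and that a video-level PSNR is the mean of per-frame PSNR; the helper names are hypothetical and this is not the authors' evaluation code. The structural and temporal metrics are not reproduced here.

```python
import math


def psnr(frame_a, frame_b, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized frames.

    Frames are flat sequences of pixel intensities; max_value=255 assumes 8-bit pixels.
    """
    if len(frame_a) != len(frame_b) or not frame_a:
        raise ValueError("frames must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(frame_a, frame_b)) / len(frame_a)
    if mse == 0:
        return float("inf")  # identical frames
    return 10.0 * math.log10(max_value ** 2 / mse)


def mean_video_psnr(video_a, video_b, max_value=255.0):
    """Average per-frame PSNR over two temporally aligned videos (lists of frames)."""
    if len(video_a) != len(video_b) or not video_a:
        raise ValueError("videos must be non-empty and have the same frame count")
    scores = [psnr(a, b, max_value) for a, b in zip(video_a, video_b)]
    return sum(scores) / len(scores)


def relative_improvement(new, baseline):
    """Percentage change of a metric relative to a baseline model's score."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / abs(baseline) * 100.0
```

For example, `relative_improvement(0.86, 0.80)` gives an increase of about 7.5%. Note that a single identical frame pair makes the video average infinite, so real evaluations typically cap or exclude such frames.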

Generating realistic traffic scenarios: A deep learning approach using generative adversarial networks (GANs)

Alam, M. S., Martens, M. H., Bazilinskyy, P.

Proceedings of International Conference on Human Interaction & Emerging Technologies: Artificial Intelligence & Future Applications (IHIET-AI). Malaga, Spain (2025)

Traffic simulations are crucial for testing systems and human behaviour in transportation research. This study investigates the potential efficacy of Unsupervised Recycle Generative Adversarial Networks (Recycle-GANs) in generating realistic traffic videos by transforming daytime scenes into nighttime environments and vice versa. By leveraging Unsupervised Recycle-GANs, we bridge the gap in data availability between day and night traffic scenarios, enhancing the robustness and applicability of deep learning algorithms for real-world applications. The generated transition videos were evaluated by 15 participants, who rated their realism on a scale of 1 to 10, achieving a mean score of 7.21. Two participants identified the videos as deepfakes without pointing out what was fake in them, although they did mention that the traffic was generated. Furthermore, GPT-4V was provided with six frames from the generated daytime videos and six from the generated nighttime videos and was asked whether the scenes were artificially created, based on lighting, shadow behaviour, perspective, scale, texture, detail, and the presence of edge artefacts. The analysis of the GPT-4V output did not reveal evidence of artificial manipulation, which supports the credibility and authenticity of the generated scenes.

Pedestrian crossing behaviour in front of electric vehicles emitting synthetic sounds: A virtual reality experiment

Bazilinskyy, P., Alam, M. S., Merino-Martínez, R.

Proceedings of 54th International Congress & Exposition on Noise Control Engineering (INTER-NOISE). São Paulo, Brazil (2025)

The increasing adoption of electric vehicles (EVs), which operate more quietly than internal combustion engine vehicles, raises concerns about their detectability, particularly for visually impaired road users. Regulations mandate exterior sound signals for EVs, ensuring minimum sound pressure levels at low speeds. However, these signals are often used in already noisy urban environments, creating a challenge: enhancing detectability without adding excessive noise pollution. This study explores the use of synthetic exterior sounds that balance high noticeability with low annoyance. An audiovisual experiment was conducted with 20 participants in 15 virtual reality scenarios featuring an EV passing in front of them. Different sound signals, including pure, intermittent, and complex tones at varying frequencies, were tested alongside two baseline cases (a diesel engine and tyre noise alone, i.e., no synthetic sound added). Participants rated sounds for annoyance, noticeability, and informativeness using 11-point ICBEN scales. Trigger measurements provided additional insights into their willingness to cross in front of the EV. The results highlight optimal sound characteristics for EVs, offering guidance on improving pedestrian safety while minimising noise pollution. By refining exterior sound design, this research contributes to the development of effective and user-friendly EV sound standards, ensuring safer and more inclusive urban environments.

Psychoacoustic assessment of synthetic sounds for electric vehicles in a virtual reality experiment

Bazilinskyy, P., Alam, M. S., Merino-Martínez, R.

Proceedings of 11th Convention of the European Acoustics Association (Euronoise). Malaga, Spain (2025)

The growing adoption of electric vehicles, known for their quieter operation compared to internal combustion engine vehicles, raises concerns about their detectability, particularly for vulnerable road users. To address this, regulations mandate the inclusion of exterior sound signals for electric vehicles, specifying minimum sound pressure levels at low speeds. These synthetic exterior sounds are often used in noisy urban environments, creating the challenge of enhancing detectability without introducing excessive noise annoyance. This study investigates the design of synthetic exterior sound signals that balance high noticeability with low annoyance. An audiovisual experiment with 14 participants was conducted using 15 virtual reality scenarios featuring a passing car. The scenarios included various sound signals, such as pure, intermittent, and complex tones at different frequencies. Two baseline cases, a diesel engine and only tyre noise, were also tested. Participants rated sounds for annoyance, noticeability, and informativeness using 11-point ICBEN scales. The findings highlight how psychoacoustic sound quality metrics predict annoyance ratings better than conventional sound metrics, providing insight into optimising sound design for electric vehicles. By improving pedestrian safety while minimising noise pollution, this research supports the development of effective and user-friendly exterior sound standards for electric vehicles.

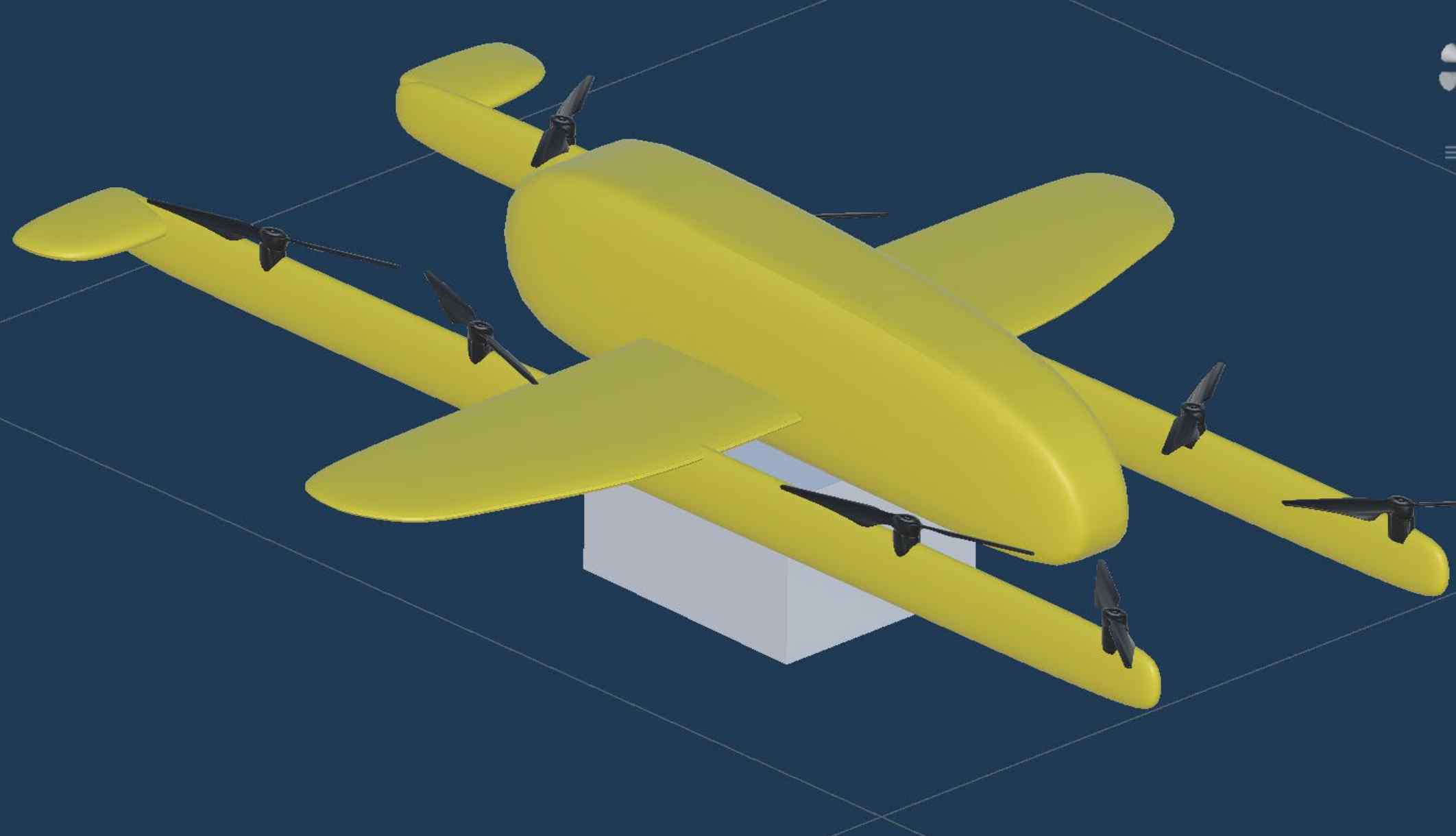

Behavioural effects of a delivery drone on feelings of uncertainty: A virtual reality experiment

Lingam, S. N., Petermeijer, S. M., Torre, I., Bazilinskyy, P., Ljungblad, S., Martens, M. H.

ACM Transactions on Human-Robot Interaction (2025)

The use of drones is expected to increase for delivering groceries or medical equipment to individuals. Understanding how people perceive drone behavior, specifically in terms of approach trajectories and delivery methods, and identifying factors that induce feelings of uncertainty are crucial for perceived safety and trust. This virtual reality experiment investigated the impact of drone approach trajectories and delivery methods on feelings of uncertainty. Forty-five participants observed a drone approaching along an orthogonal or a curved path and delivering packages either by landing or by using a cable while hovering above eye level. We found that participants felt uncertain and unsafe, especially when looking up at drones approaching along orthogonal paths. Curved paths led to lower feelings of uncertainty, with participants describing them as more natural, trustworthy, and safe. Feelings of uncertainty arose when the drone landed on the ground, due to altitude changes and potential collision concerns. Using a cable instead of landing for delivery reduced feelings of uncertainty and increased trust. The study recommends that drones avoid hovering near humans, especially after landing. Furthermore, the study suggests exploring design solutions, including design aesthetics and human-machine interfaces, that clearly convey drone intentions to help reduce feelings of uncertainty.

Enhancing cyclist safety in the EU: A study on lateral overtaking distance across seven scenarios using lab and crowdsourced methods

Sapienza, G., Bazilinskyy, P.

Adjunct Proceedings of the 17th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutoUI). Brisbane, QLD, Australia (2025)

Cyclists face significant risks from vehicles that overtake too closely. Through crowdsourcing (N = 200) and driving simulator (N = 20) experiments, this study examines driver behaviour in seven scenarios: laser projection, road sign, road marking, car projection, centre line and side line markings (baseline), cycle lane, and no road markings. Crowdsourced participants consistently underestimated overtaking distances, particularly at wider gaps, despite feeling safer with greater distances. The simulation results showed that drivers maintained an average passing distance of 3.4 m when not constrained by traffic, exceeding the 1.5 m minimum set by law in the European Union. However, interventions varied in effectiveness: while laser projection was preferred, it did not significantly increase passing distance. In contrast, a dedicated cycle lane and a solid centre line led to the greatest improvements. These findings highlight the discrepancies between perceived and actual safety and provide insight for policy interventions to enhance cyclist protection in the EU.

2024

From A to B with ease: User-centric interfaces for shuttle buses

Alam, M. S., Subramanian, T., Remlinger, W., Martens, M. H., Bazilinskyy, P.

Adjunct Proceedings of the 16th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutoUI). Stanford, CA, USA (2024)

User interfaces are crucial for easy travel. To understand user preferences for travel information during automated shuttle rides, we conducted an online survey with 51 participants from 8 countries. The survey focused on the information passengers wish to access and their preferences for using mobile, private, and public screens while boarding and travelling on the bus. It also gathered opinions on the use of Near-Field Communication (NFC) for shuttle bus confirmation and of viewing assistance that helps passengers stand precisely where the shuttle will arrive, overcoming navigation and language barriers. Results showed that 72.6% of participants indicated a need for NFC and 82.4% for viewing assistance. Preferences for viewing shuttle bus schedules on mobile screens correlated strongly with preferences for route details (r = 0.55) and next-stop information (r = 0.57), suggesting that passengers who value one type of information are likely to value related kinds too.

It is not always just one road user: Workshop on multi-agent automotive research

Bazilinskyy, P., Ebel, P., Walker, F., Dey, D., Tran, T.

Adjunct Proceedings of the 16th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutoUI). Stanford, CA, USA (2024)

In the future, roads will host a complex mix of automated and manually operated vehicles, along with vulnerable road users. However, most automotive user interfaces and human factors research focus on single-agent studies, where one human interacts with one vehicle. Only a few studies incorporate multi-agent setups. This workshop aims to (1) examine the current state of multi-agent research in the automotive domain, (2) serve as a platform for discussion toward more realistic multi-agent setups, and (3) discuss methods and practices to conduct such multi-agent research. The goal is to synthesize the insights from the AutoUI community, creating the foundation for advancing multi-agent traffic interaction research.

Exploring holistic HMI design for automated vehicles: Insights from participatory workshop to bridge in-vehicle and external communication

Dong, H., Tran, T., Verstegen, R., Cazacu, S., Gao, R., Hoggenmüller, M., Dey, D., Franssen, M., Sasalovici, M., Bazilinskyy, P., Martens, M.

Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems. Honolulu, USA (2024)

Human-Machine Interfaces (HMIs) for automated vehicles (AVs) are typically divided into two categories: internal HMIs for interactions within the vehicle, and external HMIs for communication with other road users. In this work, we examine the prospects of bridging these two seemingly distinct domains. Through a participatory workshop with automotive user interface researchers and practitioners, we facilitated a critical exploration of holistic HMI design by having workshop participants collaboratively develop interaction scenarios involving AVs, in-vehicle users, and external road users. The discussion offers insights into the escalation of interface elements as an HMI design strategy, the direct interactions between different users, and an expanded understanding of holistic HMI design. This work reflects a collaborative effort to understand the practical aspects of this holistic design approach, offering new perspectives and encouraging further investigation into this underexplored aspect of automotive user interfaces.

Putting ChatGPT vision (GPT-4V) to the test: Risk perception in traffic images

Driessen, T., Dodou, D., Bazilinskyy, P., De Winter, J. C. F.

Royal Society Open Science, 11:231676 (2024)

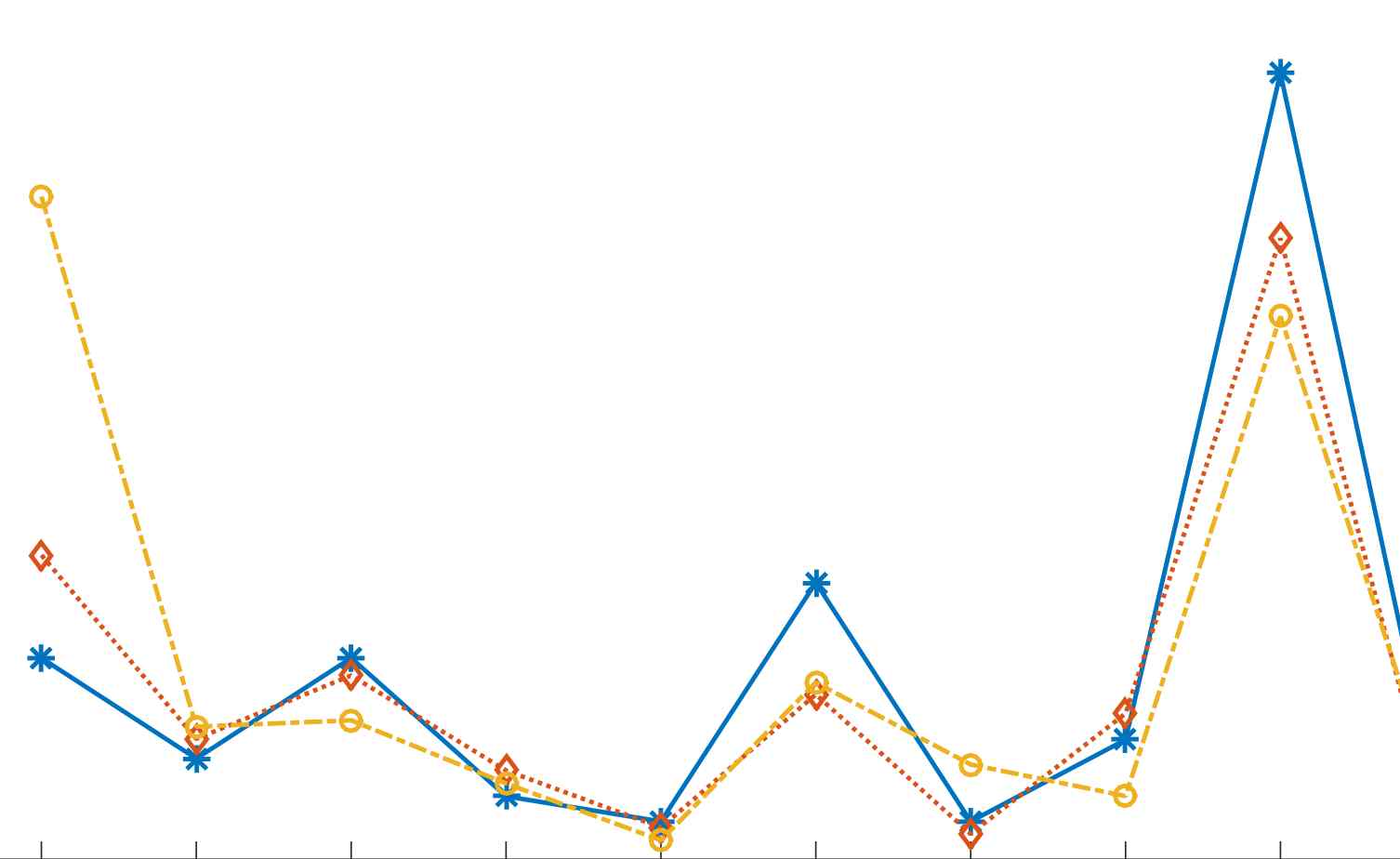

Vision-language models are of interest in various domains, including automated driving, where computer vision techniques can accurately detect road users, but where the vehicle sometimes fails to understand context. This study examined the effectiveness of GPT-4V in predicting the level of ‘risk’ in traffic images as assessed by humans. We used 210 static images taken from a moving vehicle, each previously rated by approximately 650 people. Based on psychometric construct theory and using insights from the self-consistency prompting method, we formulated three hypotheses: (i) repeating the prompt under effectively identical conditions increases validity, (ii) varying the prompt text and extracting a total score increases validity compared to using a single prompt, and (iii) in a multiple regression analysis, the incorporation of object detection features, alongside the GPT-4V-based risk rating, significantly contributes to improving the model's validity. Validity was quantified by the correlation coefficient with human risk scores, across the 210 images. The results confirmed the three hypotheses. The eventual validity coefficient was r = 0.83, indicating that population-level human risk can be predicted using AI with a high degree of accuracy. The findings suggest that GPT-4V must be prompted in a way equivalent to how humans fill out a multi-item questionnaire.

Changing lanes toward open science: Openness and transparency in automotive user research

Ebel, P., Bazilinskyy, P., Colley, M., Goodridge, C. M., Hock, P., Janssen, C., Sandhaus, H., Srinivasan, A. R., Wintersberger, P.

Adjunct Proceedings of the 16th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutoUI). Stanford, CA, USA (2024)

We review the state of open science and the perspectives on open data sharing within the automotive user research community. Openness and transparency are critical not only for judging the quality of empirical research, but also for accelerating scientific progress and promoting an inclusive scientific community. However, there is little documentation of these aspects within the automotive user research community. To address this, we report two studies that identify (1) community perspectives on motivators and barriers to data sharing, and (2) how openness and transparency have changed in papers published at AutomotiveUI over the past 5 years. We show that while open science is valued by the community and openness and transparency have improved, overall compliance is low. The most common barriers are legal constraints and confidentiality concerns. Although research published at AutomotiveUI relies more on quantitative methods than research published at CHI, openness and transparency are not as well established. Based on our findings, we provide suggestions for improving openness and transparency, arguing that the motivators for open science must outweigh the barriers.

Exploring the correlation between emotions and uncertainty in daily travel

Franssen, M., Verstegen, R.*, Bazilinskyy, P., Martens, M. H.

Proceedings of International Conference on Applied Human Factors and Ergonomics (AHFE). Nice, France (2024)

Our mental state influences how we behave in and interact with the everyday world. Both uncertainty and emotions can alter our mental state and, thus, our behaviour. Although the relationship between uncertainty and emotions has been studied, research into this relationship in the context of daily travel is lacking. Emotions may influence uncertainty, just as uncertainty could trigger emotional responses. In this paper, a study is presented that explores the relationship between uncertainty and emotional states in the context of daily travel. Using a diary study method with 25 participants, the emotions and uncertainty experienced during daily travel across multiple modes of transport were tracked for a period of 14 days. Diary studies allowed us to gain detailed insights and reflections on the emotions and uncertainty that participants experienced during their day-to-day travels. The diary allowed the participants to record their time-sensitive experiences in their relevant context over a longer period. Participants made these daily logs in the m-Path application, recording their daily transportation modes, their emotions using the Geneva Emotion Wheel, and the uncertainty that they experienced while travelling. Results show that emotions and uncertainty influence one another simultaneously, with no clear causality. Specifically, this study observed a significant correlation between negative valence emotions (disappointment and fear) and uncertainty, which emphasises the importance of uncertainty and the management of negative valence emotions in travel experiences.

Incorporating multiple users' perspectives in HMI design for automated vehicles: Exploration of a role-switching approach

Gao, R., Verstegen, R., Dong, H., Bazilinskyy, P., Martens, M. H.

Adjunct Proceedings of the 16th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutoUI). Stanford, CA, USA (2024)

Human-machine interfaces (HMIs) are important for the introduction of automated vehicles (AVs). Even though interactions can involve multiple users and modes of transportation, current research and ideation for HMIs are often directed at only one road user group. This reductionist approach goes against the principles of design, which argue for a holistic understanding. To address this gap, we applied a novel role-switching approach in which participants explored a traffic scenario from four roles: pedestrian, cyclist, driver of a manually driven vehicle, and passenger of an AV. After experiencing all roles, participants evaluated each role and generated HMI designs. Results demonstrate that the roles were perceived differently and that switching between these perspectives contributed to participants' understanding of the traffic scenario and to the generated designs. This paper reports insights on the value of a role-switching approach to promote the future development of a more holistic approach towards HMIs.

Evaluating autonomous vehicle external communication using a multi-pedestrian VR simulator

Tran, T., Parker, C., Yu, X., Dey, D., Martens, M. H., Bazilinskyy, P., Tomitsch, M.

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 8(2) (2024)

With the rise of autonomous vehicles (AVs) in transportation, a pressing concern is their seamless integration into daily life. In multi-pedestrian settings, two challenges emerge: ensuring unambiguous communication to individual pedestrians via external Human–Machine Interfaces (eHMIs), and the influence of one pedestrian on another. We conducted an experiment (N=25) using a multi-pedestrian virtual reality simulator. Participants were paired and exposed to three distinct eHMI concepts: on the vehicle, within the surrounding infrastructure, and on the pedestrian themselves, against a baseline without any eHMI. Results indicate that all eHMI concepts improved clarity of communication over the baseline, but differences in their effectiveness were observed. While pedestrian and infrastructure communications often provided more direct clarity, vehicle-based cues at times introduced elements of uncertainty. Furthermore, the study identified the role of co-located pedestrians: in the absence of clear AV communication, individuals frequently sought cues from their peers.

-

-

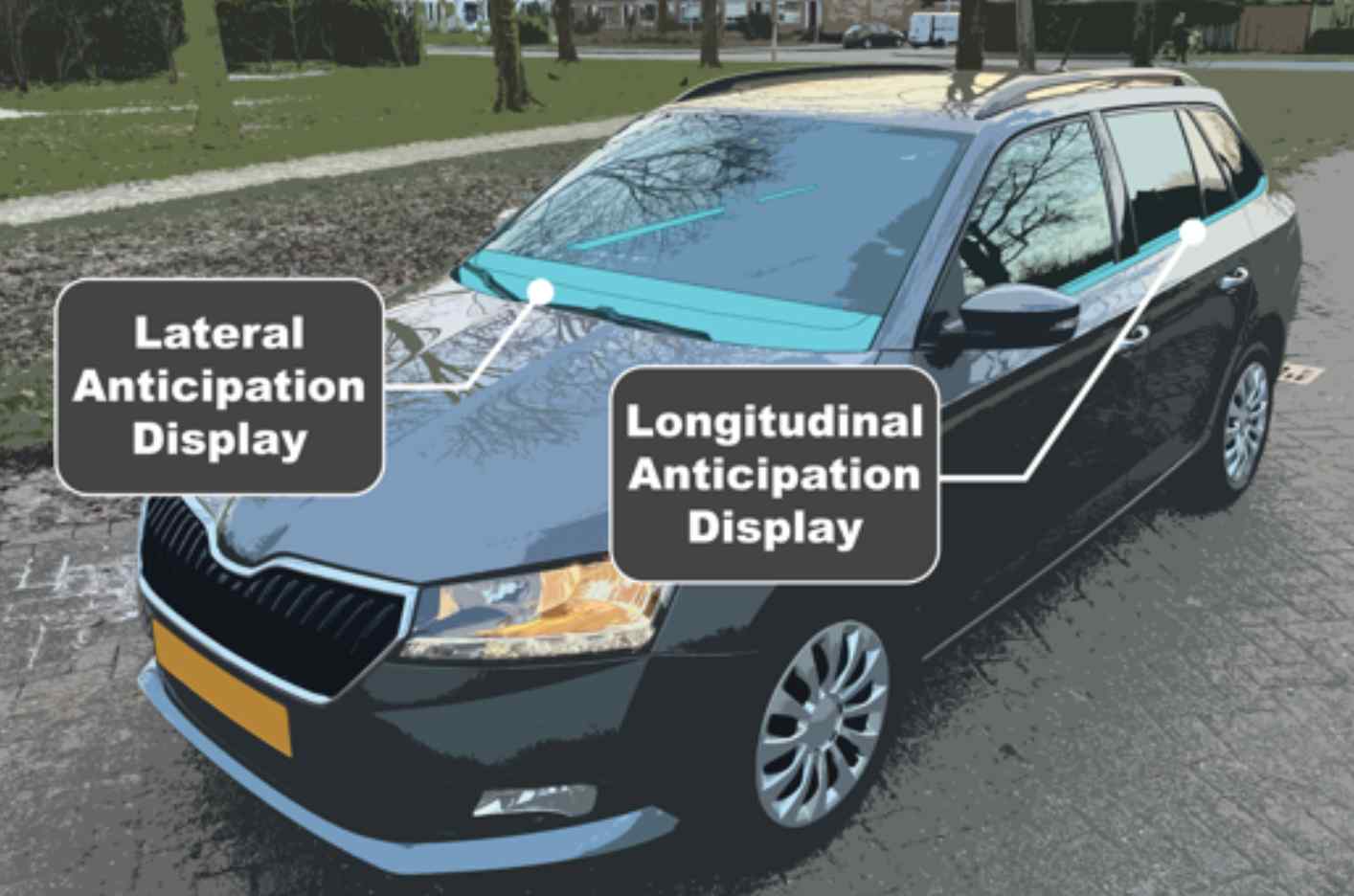

Combining internal and external communication: The design of a holistic Human-Machine Interface for automated vehicles

Versteggen, R., Gao, R., Bernhaupt, R., Bazilinskyy, P., Martens, M. H.

Proceedings of International Conference on Applied Human Factors and Ergonomics (AHFE). Nice, France (2024)

In this paper, we explore the field of holistic Human-Machine Interfaces (hHMIs). Currently, internal and external Human-Machine Interfaces are being researched as separate fields. This separation can lead to non-systemic designs that operate in different fashions, make the switch between traffic roles less seamless, and create differences in understanding of a traffic situation, potentially increasing confusion. These factors can limit the adoption of automated vehicles and lead to less seamless interactions in traffic. For this reason, we explore the concept of hHMIs, which combine internal and external communication. This paper introduces a working definition for this new type of interface. It then explores considerations for the design of such an interface: the provision of anticipatory cues, interaction modalities and perceptibility, colour usage, building upon standardisation, and the use of a singular versus a coupled interface. Next, we apply these considerations in an artefact contribution in the form of an hHMI concept. This interface communicates anticipatory cues in a unified manner to internal and external users of the automated vehicle and demonstrates how the proposed considerations can be applied. By sharing design considerations and a design concept, this paper aims to stimulate the field of holistic Human-Machine Interfaces for automated vehicles.

-

-

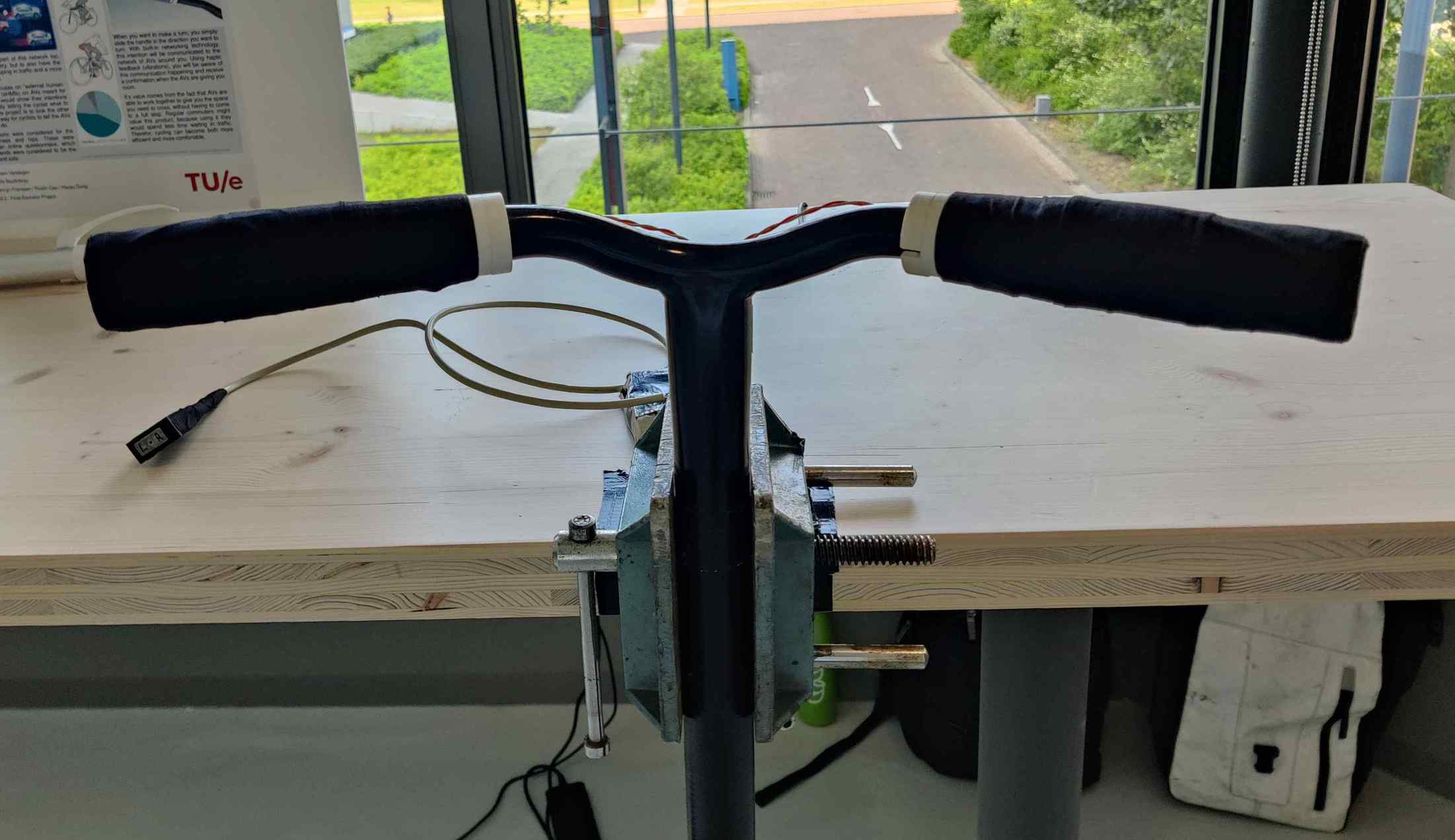

Slideo: Using bicycle-to-vehicle communication to intuitively share intentions to automated vehicles

Versteggen, J., Bazilinskyy, P.

Proceedings of International Conference on Applied Human Factors and Ergonomics (AHFE). Nice, France (2024)

In urban environments, cycling is an important method of transportation because it is sustainable, healthy, and less space-intensive than motorised traffic. Most literature on interactions between automated vehicles (AVs) and vulnerable road users (VRUs) focuses on external Human-Machine Interfaces that are positioned on AVs and tell VRUs what to do. Such an interface requires cyclists to actively look for and interpret the information and can reduce their ability to make their own decisions. We designed a physical bicycle-to-vehicle (B2V) interaction that allows cyclists to share their intention to turn with AVs through vehicle-to-everything (V2X) communication. We explored four concepts of interaction with hands, feet, hips, and knees. The final concept uses haptic feedback in each handle. The test with nine participants explored the clarity of the feedback and compared two variations: (1) providing feedback at the beginning, during, and at the end, and (2) giving feedback only at the beginning and end. Results indicate that the general meaning of both variants is clear and that the preferred variant is a matter of personal preference. We suggest that B2V interactions should be personalisable.

2023

-

-

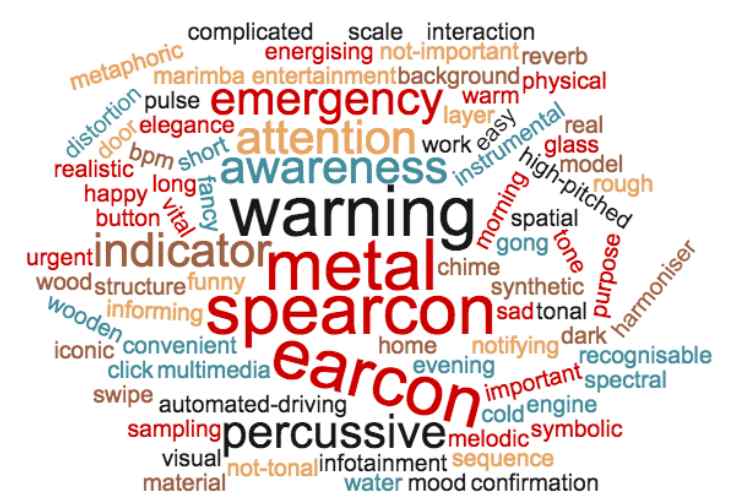

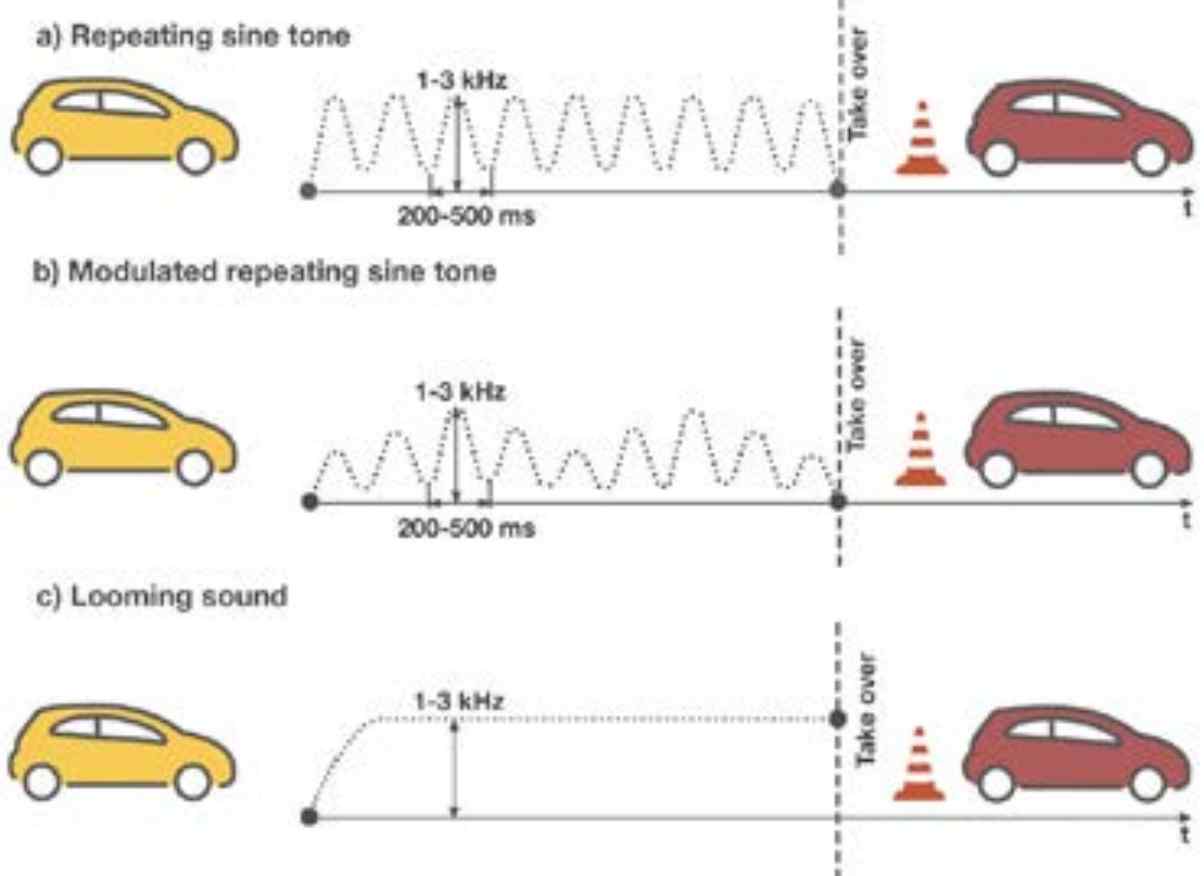

Exterior sounds for electric and automated vehicles: Loud is effective

Bazilinskyy, P., Merino-Martínez, R., Vieira, E. O., Dodou, D., De Winter, J. C. F.

Applied Acoustics, 214, 109673 (2023)

Exterior vehicle sounds have been introduced in electric vehicles and as external human–machine interfaces for automated vehicles. While previous research has studied the effect of exterior vehicle sounds on detectability and acceptance, the present study takes a different approach by examining the efficacy of such sounds in deterring people from crossing the road. An online study was conducted in which 226 participants were presented with different types of synthetic sounds, including sounds of a combustion engine, pure tones, combined tones, and beeps. Participants were presented with a scenario in which a vehicle moved along a straight trajectory at a constant velocity of 30 km/h, without any accompanying visual information. Participants, acting as pedestrians, were asked to hold down a key when they felt safe to cross. After each trial, they assessed whether the vehicle sound was easy to notice, whether it gave enough information to realize that a vehicle was approaching, and whether the sound was annoying. The results showed that sounds of higher modeled perceived loudness, such as continuous tones with high frequency, were the most effective in deterring participants from crossing the road. The tested intermittent beeps resulted in lower crossing deterrence than continuous tones, presumably because no useful information could be derived during the inter-pulse intervals. Tire noise proved to be effective in deterring participants from crossing while being the least annoying among the sounds tested. These results may prove insightful for the improvement of synthetic exterior vehicle sounds.

-

-

Predicting perceived risk of traffic scenes using computer vision

De Winter, J. C. F., Hoogmoed, J., Stapel, J., Dodou, D., Bazilinskyy, P.

Transportation Research Part F: Traffic Psychology and Behaviour, 84, 194-210 (2023)

Perceived risk, or subjective risk, is an important concept in the field of traffic psychology and automated driving. In this paper, we investigate whether perceived risk in images of traffic scenes can be predicted from computer vision features that may also be used by automated vehicles (AVs). We conducted an international crowdsourcing study with 1378 participants, who rated the perceived risk of 100 randomly selected dashcam images taken on German roads. The population-level perceived risk was found to be statistically reliable, with a split-half reliability of 0.98. We used linear regression analysis to predict perceived risk (r = 0.62) from two features obtained with the YOLOv4 computer vision algorithm: the number of people in the scene and the mean size of the bounding boxes surrounding other road users. When the ego-vehicle’s speed was added as a predictor variable, the prediction strength increased to r = 0.75. Interestingly, the regression weight for speed was negative, indicating that a higher vehicle speed was associated with a lower perceived risk. This finding aligns with the principle of self-explaining roads. Our results suggest that computer vision features and vehicle speed contribute to an accurate prediction of population-level subjective risk, outperforming the ratings provided by individual participants (mean r = 0.41). These findings may have implications for AV development and the modeling of psychological constructs in traffic psychology.

-

-

Holistic HMI design for automated vehicles: Bridging in-vehicle and external communication

Dong, H., Tran, T., Bazilinskyy, P., Hoggenmueller, M., Dey, D., Cazacu, S., Franssen, M., Gao, R.

Adjunct Proceedings of the 15th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutoUI). Ingolstadt, Germany (2023)

As the field of automated vehicles (AVs) advances, it has become increasingly critical to develop human-machine interfaces (HMIs) for both internal and external communication. Critical dialogue is emerging around the potential necessity of a holistic approach to HMI that provides a unified and coherent experience for the different stakeholders interacting with AVs. This workshop seeks to bring together designers, engineers, researchers, and other stakeholders to delve into relevant use cases, exploring the potential advantages and challenges of this approach. The insights generated from this workshop aim to inform further design and research in the development of coherent HMIs for AVs, ultimately enabling a more seamless integration of AVs into existing traffic.

-

-

Breaking barriers: Workshop on open data practices in AutoUI research

Ebel, P., Bazilinskyy, P., Hwang, A., Ju, W., Sandhaus, H., Srinivasan, A., Yang, Q., Wintersberger, P.

Adjunct Proceedings of the 15th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutoUI). Ingolstadt, Germany (2023)

While the benefits of open science and open data practices are well understood, experimental data sharing is still uncommon in the AutoUI community. The goal of this workshop is to address the current lack of data sharing practices and to promote a culture of openness. By discussing barriers to data sharing, defining best practices, and exploring open data formats, we aim to foster collaboration, improve data quality, and promote transparency. Special interest groups will be formed to identify parameter sets for recurring research topics, so that data collected in different individual studies can be used to generate insights beyond the results of the individual studies. Join us at this workshop to help democratize knowledge and advance research in the AutoUI community.

2022

-

-

Blinded windows and empty driver seats: The effects of automated vehicle characteristics on cyclist decision-making

Bazilinskyy, P., Dodou, D., Eisma, Y. B., Vlakveld, W. V., De Winter, J. C. F.

IET Intelligent Transport Systems, 17, 1 (2022)

Automated vehicles (AVs) may feature blinded (i.e., blacked-out) windows and external Human-Machine Interfaces (eHMIs), and the driver may be inattentive or absent, but how these features affect cyclists is unknown. In a crowdsourcing study, participants viewed images of approaching vehicles from a cyclist’s perspective and decided whether to brake. The images depicted different combinations of traditional versus automated vehicles, eHMI presence, vehicle approach direction, driver visibility/window-blinding, visual complexity of the surroundings, and distance to the cyclist (urgency). The results showed that the eHMI and urgency level had a strong impact on crossing decisions, whereas visual complexity had no significant influence. Blinded windows caused participants to brake for the traditional vehicle. A second crowdsourcing experiment aimed to clarify the findings of Experiment 1 by also requiring participants to detect the vehicle features. It was found that the eHMI ‘GO’ and blinded windows yielded high detection rates and that driver eye contact caused participants to continue pedalling. To conclude, blinded windows increase the probability that cyclists brake, and driver eye contact stimulates cyclists to continue cycling. Our findings, which were obtained with large international samples, may help elucidate how AVs (in which the driver may not be visible) affect cyclists’ behaviour.

-

-

Crowdsourced assessment of 227 text-based eHMIs for a crossing scenario

Bazilinskyy, P., Dodou, D., De Winter, J. C. F.

Proceedings of International Conference on Applied Human Factors and Ergonomics (AHFE). New York, USA (2022)

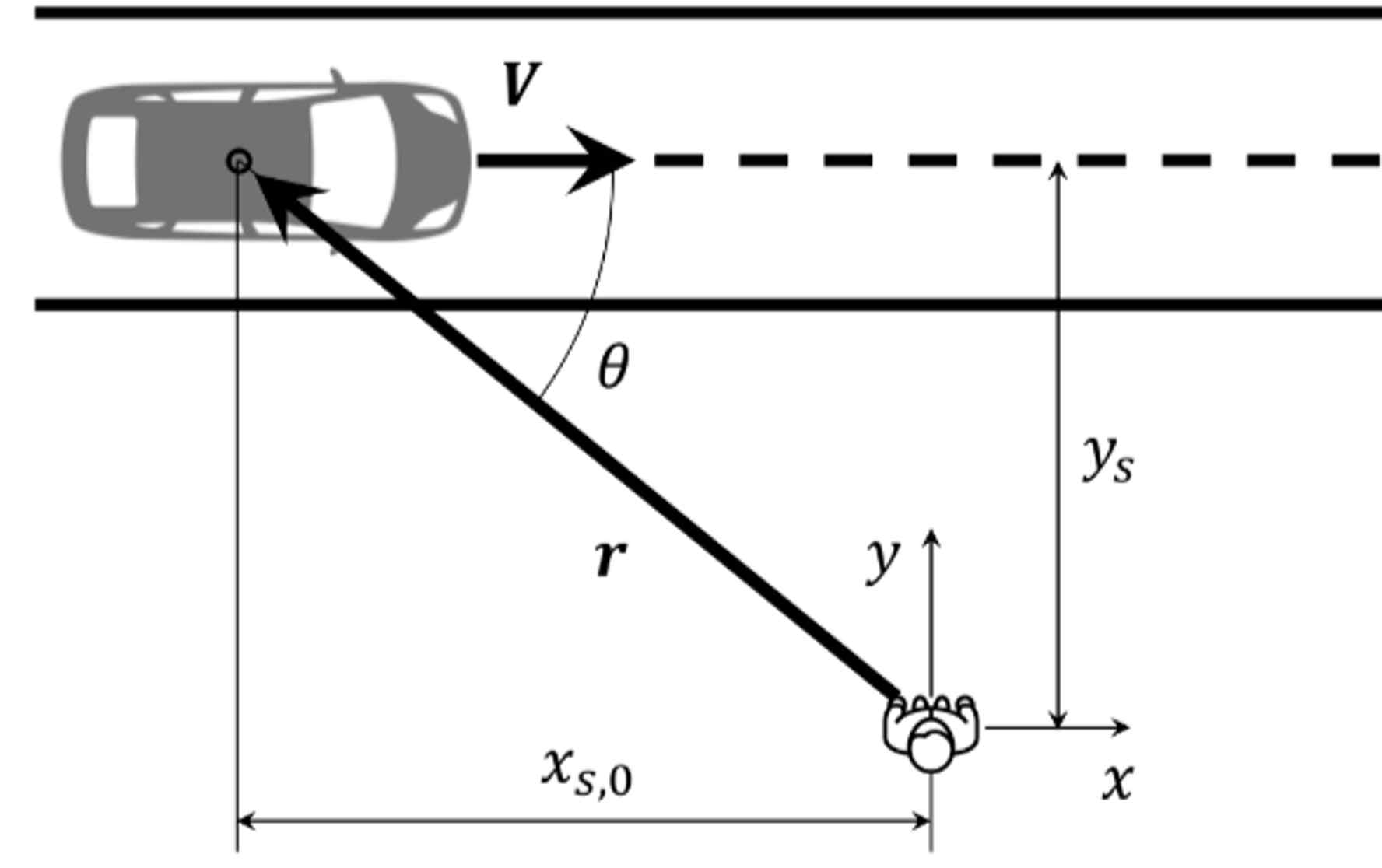

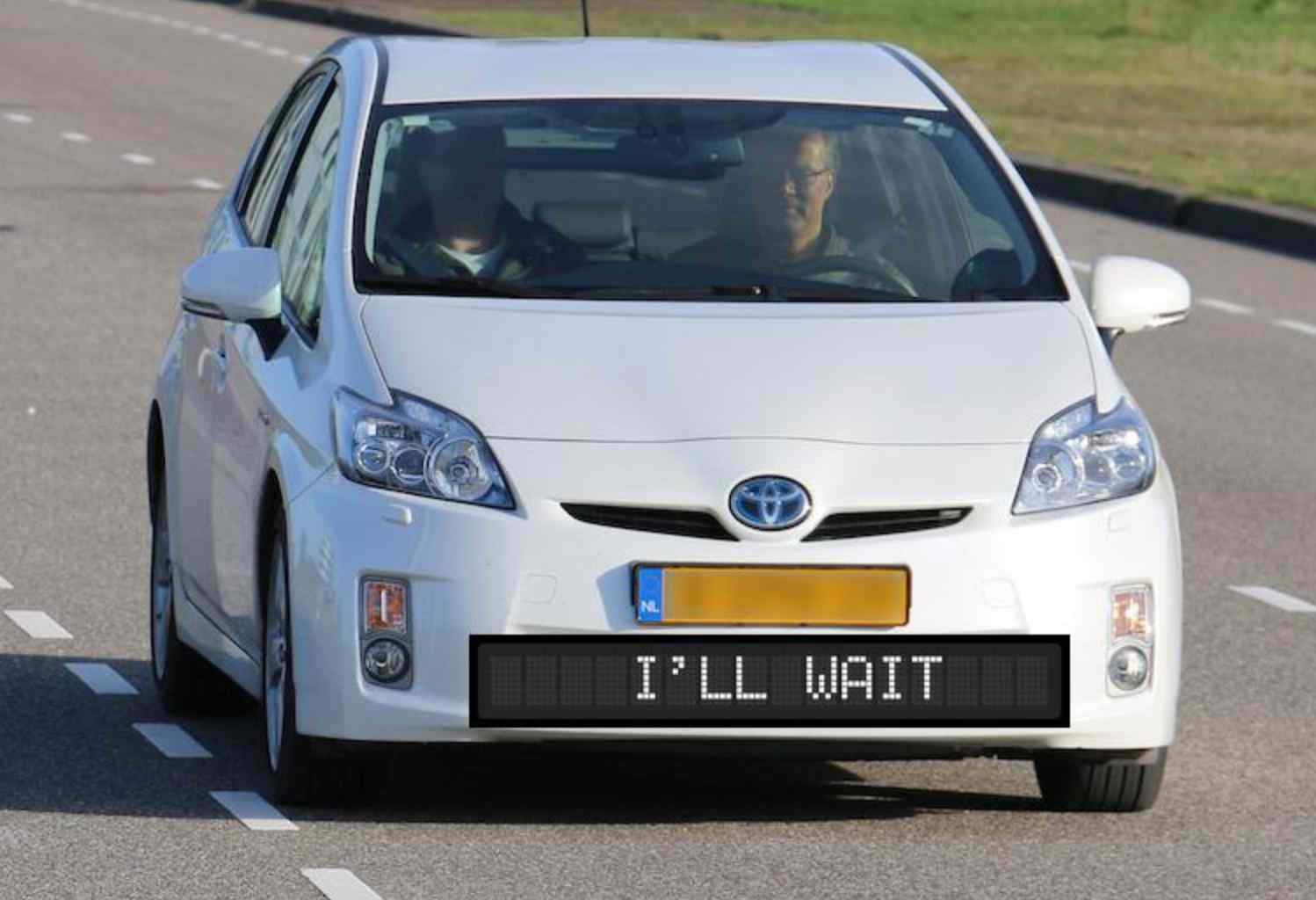

Future automated vehicles may be equipped with external human-machine interfaces (eHMIs) capable of signaling whether pedestrians can cross the road. Industry and academia have proposed a variety of eHMIs featuring a text message. An eHMI message can refer to the action to be performed by the pedestrian (egocentric message) or by the automated vehicle (allocentric message). Currently, there is no consensus on the correct phrasing of the text message. We created 227 eHMIs based on text-based eHMIs observed in the literature. A crowdsourcing experiment (N = 1241) was performed with images depicting an automated vehicle equipped with an eHMI on the front bumper. The participants indicated whether they would (not) cross the road, and response times were recorded. Egocentric messages were found to be more compelling for participants to (not) cross than allocentric messages. Furthermore, Spanish-speaking participants found Spanish eHMIs more compelling than English eHMIs. Finally, it was established that some eHMI texts should be avoided, as indicated by low compellingness, long response times, and high inter-subject variability.

-

-

Get out of the way! Examining eHMIs in critical driver-pedestrian encounters in a coupled simulator

Bazilinskyy, P., Kooijman, L., Dodou, D., Mallant, K. P. T., Roosens, V. E. R., Middelweerd, M. D. L. M., Overbeek, L. D., De Winter, J. C. F.

Proceedings of International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutoUI) (2022)

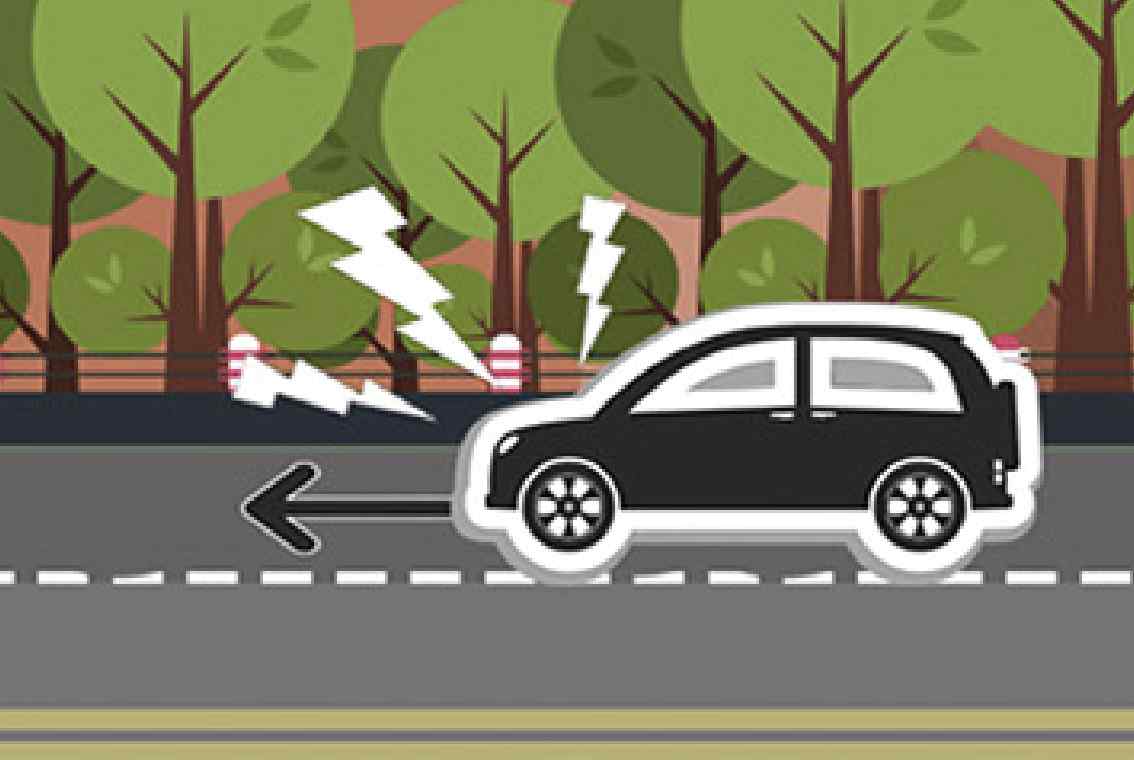

Past research suggests that displays on the exterior of the car, known as eHMIs, can be effective in helping pedestrians to make safe crossing decisions. This study examines a new application of eHMIs, namely the provision of directional information in scenarios where the pedestrian is almost hit by a car. In an experiment using a head-mounted display and a motion suit, participants had to cross the road while a car driven by another participant approached them. The results showed that the directional eHMI caused pedestrians to step back compared to no eHMI. The eHMI increased the pedestrians’ self-reported understanding of the car’s intention, although some pedestrians did not notice the eHMI. In conclusion, eHMIs may have potential for supporting pedestrians in the situations where they need support the most, namely critical encounters. Future research may consider coupling a directional eHMI to autonomous emergency steering.

-

-

Identifying lane changes automatically using the GPS sensors of portable devices

Driessen, T., Prasad, L., Bazilinskyy, P., De Winter, J. C. F.

Proceedings of International Conference on Applied Human Factors and Ergonomics (AHFE). New York, USA (2022)

Mobile applications that provide GPS-based route navigation advice or driver diagnostics are gaining popularity. However, these applications currently have no knowledge of whether the driver is performing a lane change. Having such information may prove valuable to individual drivers (e.g., to provide more specific navigation instructions) or road authorities (e.g., knowledge of lane change hotspots may inform road design). The present study aimed to assess the accuracy of lane change recognition algorithms that rely solely on mobile GPS sensor input. Three trips on Dutch highways, totaling 158 km of driving, were performed while carrying two smartphones (Huawei P20, Samsung Galaxy S9), a GPS-equipped GoPro Max, and a USB GPS receiver (GlobalSat BU343-s4). The timestamps of all 215 lane changes were manually extracted from the forward-facing GoPro camera footage and used as ground truth. After matching the GPS trajectories to the road using the Mapbox Map Matching API (2022), lane changes were identified based on the exceedance of a lateral translation threshold within set time windows. Different thresholds and window sizes were tested for their ability to discriminate between a pool of lane change segments and an equally sized pool of no-lane-change segments. The overall accuracy of the lane change classification was found to be 90%. The method appears promising for highway engineering and traffic behavior research that uses floating car data, but it may have limited applicability to real-time advisory systems due to the occasional occurrence of false positives.
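A minimal Python sketch of threshold-based segment classification is given here to illustrate the idea. It assumes that the map-matching step has already converted each GPS trajectory into a series of lateral offsets (in metres) from a road reference line, sampled at a fixed rate. That conversion, the function names, the window size, and the 2.5 m threshold in the example are illustrative assumptions, not the study's implementation or its tuned values; the study itself tested several thresholds and window sizes.

```python
from typing import Sequence


def is_lane_change_segment(lateral_offset_m: Sequence[float],
                           window: int, threshold_m: float) -> bool:
    """Hypothetical rule: flag a segment if any window shows a net lateral
    shift larger than the threshold.

    lateral_offset_m: assumed map-matched lateral offsets, one per sample.
    window: window length in samples (sample i is compared with i + window).
    """
    if window < 1:
        raise ValueError("window must be at least one sample")
    for start in range(len(lateral_offset_m) - window):
        # Net lateral translation between the window's first and last sample.
        shift = lateral_offset_m[start + window] - lateral_offset_m[start]
        if abs(shift) > threshold_m:
            return True
    return False


def accuracy(segments: Sequence[Sequence[float]], labels: Sequence[bool],
             window: int, threshold_m: float) -> float:
    """Share of segments whose predicted label matches the ground truth."""
    hits = sum(is_lane_change_segment(seg, window, threshold_m) == label
               for seg, label in zip(segments, labels))
    return hits / len(labels)


if __name__ == "__main__":
    change = [0.0, 0.0, 0.1, 0.2, 1.5, 3.0, 3.4, 3.5, 3.5]  # drifts about one lane
    straight = [0.0, 0.2, -0.1, 0.3, 0.1, 0.0]              # GPS jitter only
    print(accuracy([change, straight], [True, False], window=3, threshold_m=2.5))  # 1.0
```

Comparing the endpoints of each window measures net lateral translation rather than accumulated jitter; lowering the threshold or widening the window would tend to trade missed lane changes for false positives.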

-

-

Stopping by looking: A driver-pedestrian interaction study in a coupled simulator using head-mounted displays with eye-tracking

Mok, C. S., Bazilinskyy, P., De Winter, J. C. F.

Applied Ergonomics, 105, 103825 (2022)

Automated vehicles (AVs) can perform low-level control tasks but are not always capable of proper decision-making. This paper presents a concept of eye-based maneuver control for AV-pedestrian interaction. Previously, it was unknown whether the AV should conduct a stopping maneuver when the driver looks at the pedestrian or looks away from the pedestrian. A two-agent experiment was conducted using two head-mounted displays with integrated eye-tracking. Seventeen pairs of participants (pedestrian and driver) each interacted in a road crossing scenario. The pedestrians' task was to hold a button when they felt safe to cross the road, and the drivers' task was to direct their gaze according to instructions. Participants completed three 16-trial blocks: (1) Baseline, in which the AV was pre-programmed to yield or not yield, (2) Look to Yield (LTY), in which the AV yielded when the driver looked at the pedestrian, and (3) Look Away to Yield (LATY), in which the AV yielded when the driver did not look at the pedestrian. The driver's eye movements in the LTY and LATY conditions were visualized using a virtual light beam. Crossing performance was assessed based on whether the pedestrian held the button when the AV yielded and released the button when the AV did not yield. Furthermore, the pedestrians' and drivers' acceptance of the mappings was measured through a questionnaire. The results showed that the LTY and LATY mappings yielded better crossing performance than Baseline. Furthermore, the LTY condition was best accepted by drivers and pedestrians. Eye-tracking analyses indicated that the LTY and LATY mappings attracted the pedestrian's attention, while pedestrians still distributed their attention between the AV and a second vehicle approaching from the other direction. In conclusion, LTY control may be a promising means of AV control at intersections before full automation is technologically feasible.

-

-

The effect of drivers’ eye contact on pedestrians’ perceived safety

Onkhar, V., Bazilinskyy, P., Dodou, D., De Winter, J. C. F.

Transportation Research Part F: Traffic Psychology and Behaviour, 84, 194-210 (2022)

Many fatal accidents involving pedestrians occur at road crossings and are attributed to a breakdown of communication between pedestrians and drivers. Therefore, it is important to investigate how forms of communication in traffic, such as eye contact, influence crossing decisions. Thus far, there is little information about the effect of drivers’ eye contact on pedestrians’ perceived safety to cross the road. Existing studies treat eye contact as immutable, i.e., it is either present or absent in the whole interaction, an approach that overlooks the effect of the timing of eye contact. We present an online crowdsourced study that addresses this research gap. A total of 1835 participants viewed 13 videos of an approaching car twice, in random order, and held a key whenever they felt safe to cross. The videos differed in terms of whether the car yielded or not, whether the car driver made eye contact or not, and the times when the driver made eye contact. Participants also answered questions about the perceived intuitiveness of the driver’s eye contact behavior. The results showed that eye contact made people feel considerably safer to cross compared to no eye contact (the keypress percentage increased from 31% to 50%). In addition, the initiation and termination of eye contact affected perceived safety to cross more strongly than continuous eye contact and a lack of it, respectively. The car’s motion, however, was a more dominant factor. Additionally, the driver’s eye contact was considered intuitive when the car braked and counterintuitive when it drove off. In summary, this study demonstrates for the first time how drivers’ eye contact affects pedestrians’ perceived safety as a function of time in a dynamic scenario, and it questions the notion in recent literature that eye contact in road interactions is dispensable. These findings may be of interest in the development of automated vehicles (AVs), where the driver of the AV might not always be paying attention to the environment.

2021

-

-

What driving style makes pedestrians think a passing vehicle is driving automatically?

Bazilinskyy, P., Sakuma, T., De Winter, J. C. F.

Applied Ergonomics, 95, 103428 (2021)

An important question in the development of automated vehicles (AVs) is which driving style AVs should adopt and how other road users perceive them. The current study aimed to determine which AV behaviours contribute to pedestrians’ judgements as to whether the vehicle is driving manually or automatically, as well as to judgements of likeability. We tested five target trajectories of an AV in curves: playback manual driving, two stereotypical automated driving conditions (road centre tendency, lane centre tendency), and two stereotypical manual driving conditions, which slowed down for curves and cut curves. In addition, four braking patterns for approaching a zebra crossing were tested: manual braking, stereotypical automated driving (fixed deceleration), and two variations of stereotypical manual driving (sudden stop, crawling forward). The AV was observed by 24 participants standing in groups on the curb of the road. After each passing of the AV, participants rated whether the car was driven manually or automatically, and the degree to which they liked the AV’s behaviour. Results showed that the playback manual trajectory was considered more manual than the other trajectory conditions. The stereotypical automated ‘road centre tendency’ and ‘lane centre tendency’ trajectories received likeability ratings similar to those of playback manual driving. An analysis of written comments showed that curve cutting was a reason to believe the car was driving manually, whereas driving at a constant speed or in the centre was associated with automated driving. The sudden stop was the least likeable way to decelerate, but there was no consensus on whether this behaviour was manual or automated. It is concluded that AVs do not have to drive like a human in order to be liked.

-

-

How should external Human-Machine Interfaces behave? Examining the effects of colour, position, message, activation distance, vehicle yielding, and visual distraction among 1,434 participants

Bazilinskyy, P., Kooijman, L., Dodou, D., De Winter, J. C. F.

Applied Ergonomics, 95, 103450 (2021)

External human-machine interfaces (eHMIs) may be useful for communicating the intention of an automated vehicle (AV) to a pedestrian, but it is unclear which eHMI design is most effective. In a crowdsourced experiment, we examined the effects of (1) colour (red, green, cyan), (2) position (roof, bumper, windshield), (3) message (WALK, DON'T WALK, WILL STOP, WON'T STOP, light bar), (4) activation distance (35 or 50 m from the pedestrian), and (5) the presence of visual distraction in the environment, on pedestrians' perceived safety of crossing the road in front of yielding and non-yielding AVs. Participants (N = 1434) had to press a key when they felt safe to cross while watching 40 randomly selected videos (out of 276) of an approaching AV with an eHMI. Results showed that (1) green and cyan eHMIs led to higher perceived safety of crossing than red eHMIs, with no significant difference between green and cyan; (2) eHMIs on the bumper and roof were more effective than eHMIs on the windshield; (3) for yielding AVs, perceived safety was higher for WALK than for WILL STOP, followed by the light bar, whereas for non-yielding AVs, a red bar yielded similar results to red text; (4) for yielding AVs, a red bar caused lower perceived safety when activated early compared to late, whereas green/cyan WALK led to higher perceived safety when activated late compared to early; and (5) distraction had no significant effect. We conclude that people adopt an egocentric perspective, that the windshield is an ineffective position, that the often-recommended colour cyan may have to be avoided, and that eHMI activation distance has intricate effects related to onset saliency.

-

-

Visual attention of pedestrians in traffic scenes: A crowdsourcing experiment

Bazilinskyy, P., Kyriakidis, M., De Winter, J. C. F.

Proceedings of International Conference on Applied Human Factors and Ergonomics (AHFE). New York, USA (2021)

In a crowdsourced experiment, the effects of distance and type of the approaching vehicle, traffic density, and visual clutter on pedestrians’ attention distribution were explored. 965 participants viewed 107 images of diverse traffic scenes for durations between 100 and 4000 ms. Participants’ eye-gaze data were collected using the TurkEyes method. The method involved briefly showing codecharts after each image and asking the participants to type the code they saw last. The results indicate that automated vehicles were more often glanced at than manual vehicles. Measuring eye gaze without an eye tracker is promising.

-

-

How do pedestrians distribute their visual attention when walking through a parking garage? An eye-tracking study

De Winter, J. C. F., Bazilinskyy, P., Wesdorp, D., De Vlam, V., Hopmans, B., Visscher, J., Dodou, D.

Ergonomics, 64, 793–805 (2021)

We examined what pedestrians look at when walking through a parking garage. Thirty-six participants walked a short route on one floor of a parking garage while their eye movements and head rotations were recorded with a Tobii Pro Glasses 2 eye-tracker. The participants’ fixations were then classified into 14 areas of interest. The results showed that pedestrians often looked at the back (20.0%), side (7.5%), and front (4.2%) of parked cars, and at approaching cars (8.8%). Much attention was also paid to the ground (20.1%). The wheels of cars (6.8%) and the driver in approaching cars (3.2%) received attention as well. In conclusion, this study showed that eye movements are largely functional in the sense that they appear to assist in safe navigation through the parking garage. Pedestrians look at a variety of sides and features of the car, suggesting that displays on future automated cars should be omnidirectionally visible.

-

-

Towards the detection of driver–pedestrian eye contact

Onkhar, V., Bazilinskyy, P., Stapel, J. C. J., Dodou, D., Gavrila, D., De Winter, J. C. F.

Pervasive and Mobile Computing, 76, 101455 (2021)

Non-verbal communication, such as eye contact between drivers and pedestrians, has been regarded as one way to reduce accident risk. So far, studies have assumed rather than objectively measured the occurrence of eye contact. We address this research gap by developing an eye contact detection method and testing it in an indoor experiment with scripted driver-pedestrian interactions at a pedestrian crossing. Thirty participants acted as pedestrians, either standing on an imaginary curb or crossing an imaginary one-lane road in front of a stationary vehicle with an experimenter in the driver’s seat. In half of the trials, pedestrians were instructed to make eye contact with the driver; in the other half, they were prohibited from doing so. Both parties’ gaze was recorded using eye trackers. An in-vehicle stereo camera recorded the car’s point of view, a head-mounted camera recorded the pedestrian’s point of view, and the locations of the driver’s and pedestrian’s eyes were estimated using image recognition. We demonstrate that eye contact can be detected by measuring the angles between the vector joining the estimated locations of the driver’s and pedestrian’s eyes and the pedestrian’s and driver’s instantaneous gaze directions, respectively, and identifying whether these angles fall below a threshold of 4°. Based on the measured eye contact duration, we achieved 100% correct classification of the trials with and without eye contact. The proposed eye contact detection method may be useful for future research into eye contact.
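The angle-threshold rule described above can be illustrated with a short Python sketch. It assumes that 3-D eye positions and gaze direction vectors for the driver and the pedestrian are already available in a common coordinate frame; the eye trackers, cameras, and image recognition that produce these inputs are not reproduced. The function names and the duration helper are hypothetical, and the sketch encodes one reading of the abstract: eye contact holds for a sample only when both gaze directions lie within 4° of the line joining the two pairs of eyes.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def angle_deg(u: Sequence[float], v: Sequence[float]) -> float:
    """Return the angle between two non-zero 3-D vectors in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] so floating-point round-off cannot break acos.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


def is_eye_contact(driver_eye: Vec3, ped_eye: Vec3,
                   driver_gaze: Vec3, ped_gaze: Vec3,
                   threshold_deg: float = 4.0) -> bool:
    """Hypothetical per-sample check: both gazes lie along the eye-to-eye line."""
    # Vector from the driver's eyes to the pedestrian's eyes, and its reverse.
    driver_to_ped = [p - d for p, d in zip(ped_eye, driver_eye)]
    ped_to_driver = [-c for c in driver_to_ped]
    return (angle_deg(driver_gaze, driver_to_ped) < threshold_deg
            and angle_deg(ped_gaze, ped_to_driver) < threshold_deg)


def eye_contact_duration(samples: Sequence[bool], dt_s: float) -> float:
    """Total time (s) flagged as eye contact, given a fixed sampling interval."""
    return sum(samples) * dt_s


if __name__ == "__main__":
    driver, ped = (0.0, 0.0, 1.2), (5.0, 0.0, 1.6)
    mutual = is_eye_contact(driver, ped, (5.0, 0.0, 0.4), (-5.0, 0.0, -0.4))
    looking_away = is_eye_contact(driver, ped, (5.0, 0.0, 0.4), (0.0, 1.0, 0.0))
    print(mutual, looking_away)  # True False
```

Because the threshold is applied per sample, a trial-level decision such as the duration-based classification mentioned in the abstract would aggregate these flags over time; `eye_contact_duration` shows one simple way to do so under a fixed sampling rate.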

-

-

Bio-inspired intent communication for automated vehicles

Oudshoorn, M. P. J., De Winter, J. C. F., Bazilinskyy, P., Dodou, D.

Transportation Research Part F: Traffic Psychology and Behaviour, 80, 127-140 (2021)

Various external human-machine interfaces (eHMIs) have been proposed that communicate the intent of automated vehicles (AVs) to vulnerable road users. However, there is no consensus on which eHMI concept is most suitable for intent communication. In nature, animals have evolved the ability to communicate intent via visual signals. Inspired by intent communication in nature, this paper investigated three novel and potentially intuitive eHMI designs that rely on posture, gesture, and colouration, respectively. In an online crowdsourcing study, 1141 participants viewed videos featuring a yielding or non-yielding AV with one of the three bio-inspired eHMIs, as well as a green/red lightbar eHMI, a walk/don't walk text-based eHMI, and a baseline condition (i.e., no eHMI). Participants were asked to press and hold a key when they felt safe to cross and to answer rating questions. Together, these measures were used to determine the intuitiveness of the tested eHMIs. Results showed that the lightbar eHMI and text-based eHMI were more intuitive than the three bio-inspired eHMIs, which, in turn, were more intuitive than the baseline condition. An exception was the bio-inspired colouration eHMI, which produced a performance score equivalent to that of the text-based eHMI when communicating 'non-yielding'. Further research is necessary to examine whether these observations hold in more complex traffic situations. Additionally, we recommend combining features from different eHMIs, such as the full-body communication of the bio-inspired colouration eHMI with the colours of the lightbar eHMI.

-

-

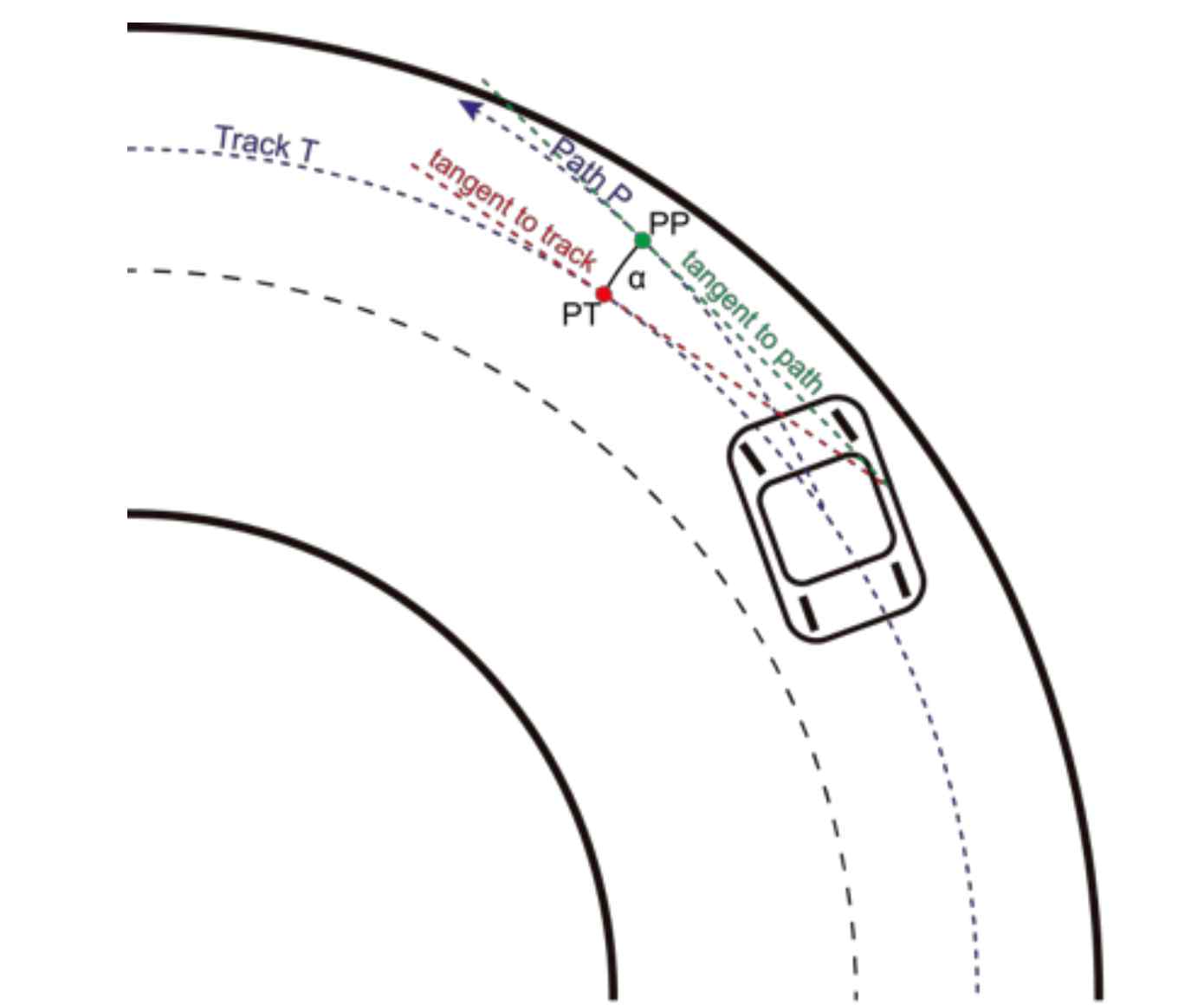

Automated vehicles that communicate implicitly: Examining the use of lateral position within the lane

Sripada, A., Bazilinskyy, P., De Winter, J. C. F.

Ergonomics, 1–13 (2021)

It may be necessary to introduce new modes of communication between automated vehicles (AVs) and pedestrians. This research proposes using the AV’s lateral deviation within the lane to communicate whether the AV will yield to the pedestrian. In an online experiment, animated video clips depicting an approaching AV were shown to participants. Each of 1104 participants viewed 28 videos twice in random order. The videos differed in deviation magnitude, deviation onset, turn indicator usage, and deviation-yielding mapping. Participants had to press and hold a key as long as they felt safe to cross, and report the perceived intuitiveness of the AV’s behaviour after each trial. The results showed that the AV moving towards the pedestrian to indicate yielding and away to indicate continuing driving was more effective than the opposite combination. Furthermore, the turn indicator was regarded as intuitive for signalling that the AV will yield. Practitioner summary: Future automated vehicles (AVs) may have to communicate with vulnerable road users. Many researchers have explored explicit communication via text messages and LED strips on the outside of the AV. The present study examines the viability of implicit communication via the lateral movement of the AV.

2020

-

-

Coupled simulator for research on the interaction between pedestrians and (automated) vehicles

Bazilinskyy, P., Kooijman, L.*, De Winter, J. C. F.

Proceedings of Driving Simulation Conference (DSC). Antibes, France (2020)

Driving simulators are regarded as valuable tools for human factors research on automated driving and traffic safety. However, simulators that enable the study of human-human interactions are rare. In this study, we present an open-source coupled simulator developed in Unity. The simulator supports input from head-mounted displays, motion suits, and game controllers. It facilitates research on interactions between pedestrians and humans inside manual and automated vehicles. We present results of a demo experiment on the interaction between a passenger in an automated car equipped with an external human-machine interface, a driver of a manual car, and a pedestrian. We conclude that the newly developed open-source coupled simulator is a promising tool for future human factors research.

-

-

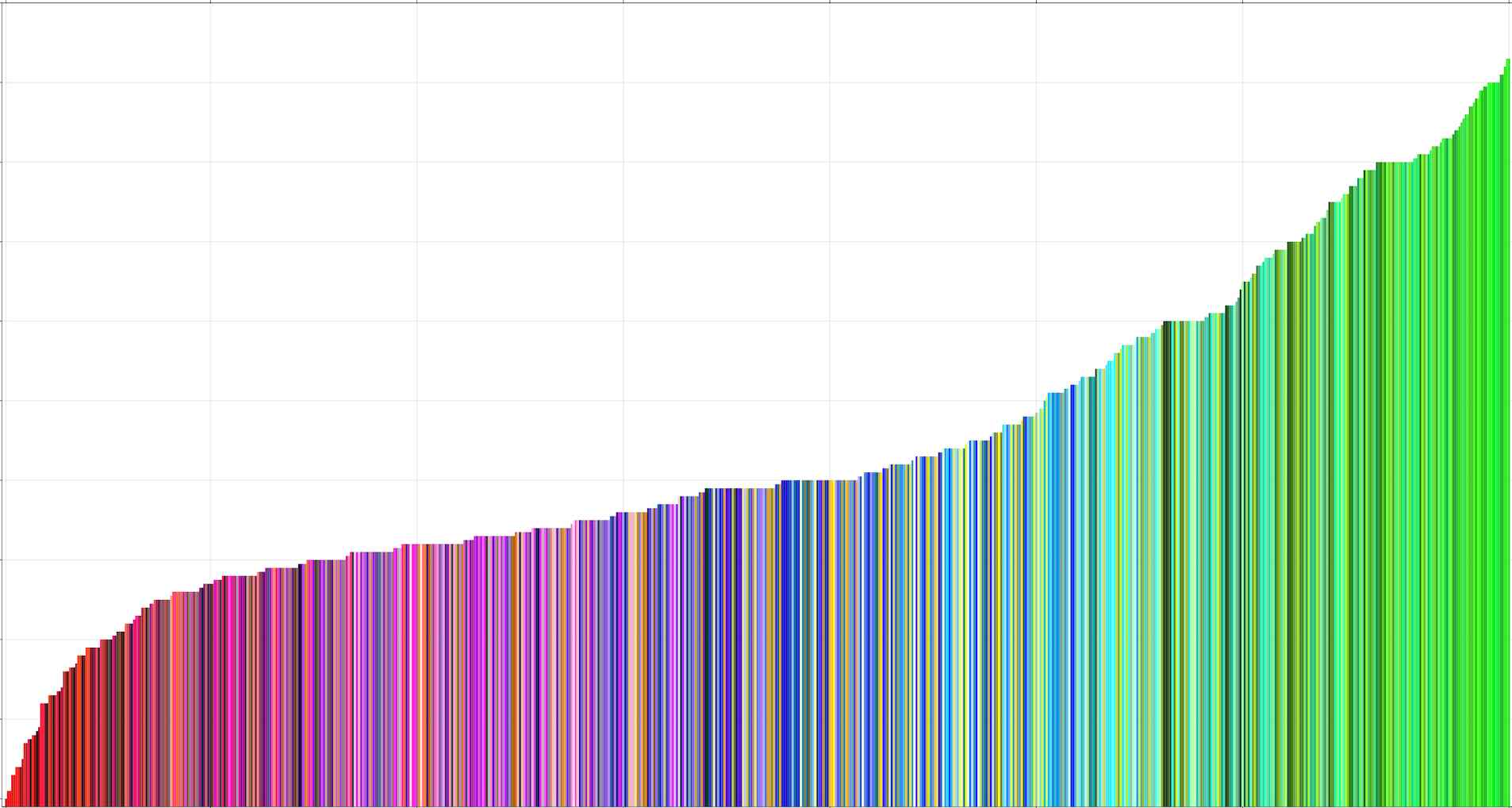

External Human-Machine Interfaces: Which of 729 colors is best for signaling ‘Please (do not) Cross’?

Bazilinskyy, P., Dodou, D., De Winter, J. C. F.

Proceedings of IEEE International Conference on Systems, Man, and Cybernetics (SMC). Toronto, Canada (2020)

Future automated vehicles may be equipped with external human-machine interfaces (eHMIs) capable of signaling to pedestrians whether or not they can cross the road. There is currently no consensus on the correct colors for eHMIs. Industry and academia have already proposed a variety of eHMI colors, including red and green, as well as colors that are said to be neutral, such as cyan. A confusion that can arise with red and green is whether the color refers to the pedestrian (egocentric perspective) or the automated vehicle (allocentric perspective). We conducted two crowdsourcing experiments (N = 2000 each) with images depicting an automated vehicle equipped with an eHMI in the form of a rectangular display on the front bumper. The eHMI displayed one of 729 colors from the RGB spectrum. In Experiment 1, participants rated the intuitiveness of a random subset of 100 of these eHMIs for signaling 'please cross the road', and in Experiment 2 for 'please do NOT cross the road'. The results showed that for 'please cross', bright green colors were considered the most intuitive. For 'please do NOT cross', red colors were rated as the most intuitive, but with high standard deviations among participants. In addition, some participants rated green colors as intuitive for 'please do NOT cross'. Results were consistent for men and women and for colorblind and non-colorblind persons. It is concluded that eHMIs should be green if the eHMI is intended to signal 'please cross', but green and red should be avoided if the eHMI is intended to signal 'please do NOT cross'. Various neutral colors can be used for that purpose, including cyan, yellow, and purple.

-

-

Risk perception: A study using dashcam videos and participants from different world regions

Bazilinskyy, P., Eisma, Y. B., Dodou, D., De Winter, J. C. F.

Traffic Injury Prevention, 21, 347–353 (2020)

Objective: Research has shown that perceived risk is a vital variable in the understanding of road traffic safety. Having experience in a particular traffic environment can be expected to affect perceived risk. More specifically, drivers may readily recognize traffic hazards when driving in their own world region, resulting in high perceived risk (the expertise hypothesis). Conversely, drivers may be desensitized to traffic hazards that are common in their own world region, resulting in low perceived risk (the desensitization hypothesis). This study investigated whether participants experienced higher or lower perceived risk for traffic situations from their own region compared to traffic situations from other regions. Methods: In a crowdsourcing experiment, participants viewed dashcam videos from four regions: India, Venezuela, United States, and Western Europe. Participants had to press a key when they felt the situation was risky. Results: Data were obtained from 800 participants, with 52 participants from India, 75 from Venezuela, 79 from the United States, 32 from Western Europe, and 562 from other countries. The results provide support for the desensitization hypothesis. For example, participants from India perceived low risk for hazards (e.g., a stationary car on the highway) that were perceived as risky by participants from other regions. At the same time, support for the expertise hypothesis was obtained, as participants in some cases detected hazards that were specific to their own region (e.g., participants from Venezuela detected inconspicuous roadworks in a Venezuelan city better than did participants from other regions). Conclusion: We found support for both the desensitization hypothesis and the expertise hypothesis. These findings have implications for cross-cultural hazard perception research.

2019

-

-

Blind driving by means of the track angle error

Bazilinskyy, P., Bijker, L., Dielissen, T., French, S., Mooijman, T., Peters, L., De Winter, J. C. F.

Proceedings of International Congress on Sound and Vibration (ICSV). Montreal, Canada (2019)

This study is the third iteration in a series of studies aimed at developing a system that allows driving blindfolded. We used a sonification approach, in which the predicted angular error of the car 2 seconds into the future was translated into spatialized beeping sounds. In a driving simulator experiment with 20 participants, we tested whether a surround-sound feedback system that uses four speakers yields better lane-keeping performance than binary directional feedback produced by two speakers. We also examined whether adding a corner support system to the binary system improves lane-keeping performance. Compared to the two previous iterations, this study presents a more realistic experimental setting, as participants were unfamiliar with the feedback system and received the feedback without headphones. The results show that participants had poor lane-keeping performance. Furthermore, the driving task was perceived as demanding, especially in the case of the additional corner support. Our findings from the blind driving projects suggest that drivers benefit from simple auditory feedback; additional auditory stimuli (e.g., corner support) add workload without improving performance.

-

-

Continuous auditory feedback on the status of adaptive cruise control, lane deviation, and time headway: An acceptable support for truck drivers?

Bazilinskyy, P., Larsson, P., Johansson, E., De Winter, J. C. F.

Acoustical Science and Technology, 40, 382–390 (2019)

The number of trucks that are equipped with driver assistance systems is increasing. These driver assistance systems typically offer binary auditory warnings or notifications upon lane departure, close headway, or automation (de)activation. Such binary sounds may annoy the driver if presented frequently. Truck drivers are well accustomed to the sound of the engine and wind in the cabin. Based on the premise that continuous sounds are more natural than binary warnings, we propose continuous auditory feedback on the status of adaptive cruise control, lane offset, and headway, which blends with the engine and wind sounds that are already present in the cabin. An on-road study with 23 truck drivers was performed, where participants were presented with the additional sounds in isolation from each other and in combination. Results showed that the sounds were easy to understand and that the lane-offset sound was regarded as somewhat useful. Systems with feedback on the status of adaptive cruise control and headway were seen as not useful. Participants overall preferred a silent cabin and expressed displeasure with the idea of being presented with extra sounds on a continuous basis. Suggestions are provided for designing less intrusive continuous auditory feedback.

-

-

Survey on eHMI concepts: The effect of text, color, and perspective

Bazilinskyy, P., Dodou, D., De Winter, J. C. F.

Transportation Research Part F: Traffic Psychology and Behaviour, 67, 175–194 (2019)

The automotive industry has presented a variety of external human-machine interfaces (eHMIs) for automated vehicles (AVs). However, there appears to be no consensus on which types of eHMIs are clear to vulnerable road users. Here, we present the results of two large crowdsourcing surveys on this topic. In the first survey, we asked respondents about the clarity of 28 images, videos, and patent drawings of eHMI concepts presented by the automotive industry. Results showed that textual eHMIs were generally regarded as the clearest. Among the non-textual eHMIs, a projected zebra crossing was regarded as clear, whereas light-based eHMIs were seen as relatively unclear. A considerable proportion of the respondents mistook non-textual eHMIs for a sensor. In the second survey, we examined the effect of perspective of the textual message (egocentric from the pedestrian's point of view: 'Walk', 'Don't walk' vs. allocentric: 'Will stop', 'Won't stop') and color (green, red, white) on whether respondents felt safe to cross in front of the AV. The results showed that textual eHMIs were more persuasive than color-only eHMIs, which is in line with the results from the first survey. The eHMI that received the highest percentage of 'Yes' responses was the message 'Walk' in green font, which points towards an egocentric perspective taken by the pedestrian. We conclude that textual egocentric eHMIs are regarded as clearest, which poses a dilemma because textual instructions are associated with practical issues of liability, legibility, and technical feasibility.

-

-

When will most cars drive fully automatically? An analysis of international surveys

Bazilinskyy, P., Kyriakidis, M., De Winter, J. C. F.

Transportation Research Part F: Traffic Psychology and Behaviour, 64, 184–195 (2019)

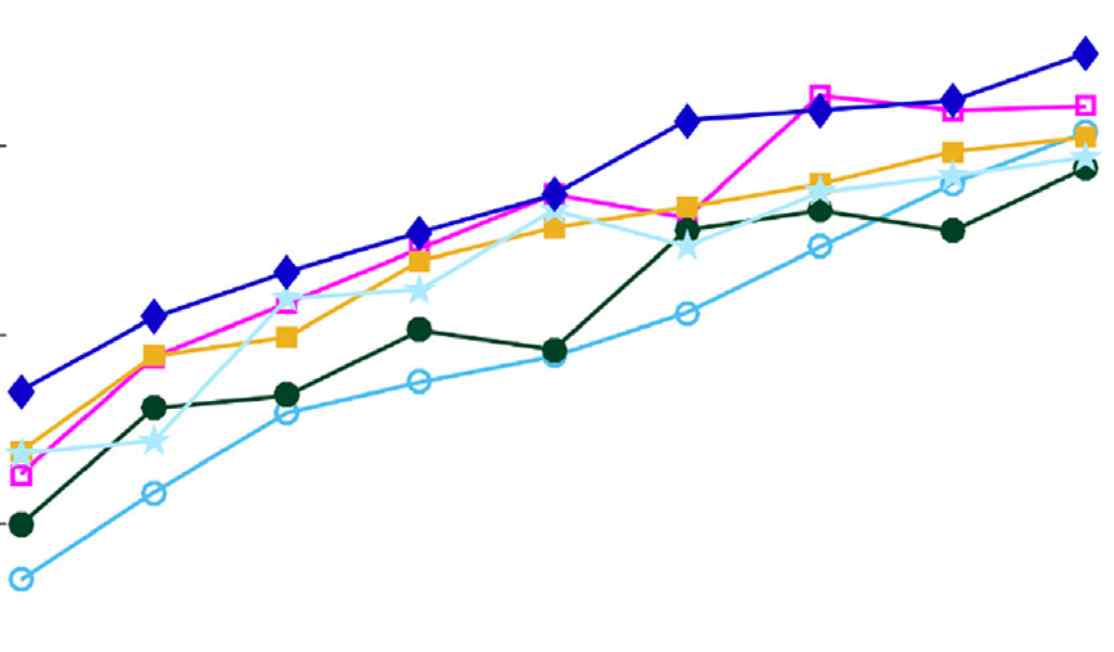

When fully automated cars will be widespread is a question that has attracted considerable attention from futurists, car manufacturers, and academics. This paper aims to poll the public’s expectations regarding the deployment of fully automated cars. In 15 crowdsourcing surveys conducted between June 2014 and January 2019, we obtained answers from 18,970 people in 128 countries regarding when they think that most cars will be able to drive fully automatically in their country of residence. The median reported year was 2030. The later the survey date, the smaller the percentage of respondents who reported that most cars would be able to drive fully automatically by 2020, with 15–22% of the respondents providing this estimate in the surveys conducted between 2014 and 2016 versus 3–5% in the 2018 surveys. Respondents who completed multiple surveys were more likely to revise their estimate upward (39.4%) than downward (35.3%). Correlational analyses showed that people from more affluent countries and people who have heard of the Google Driverless Car (Waymo) or the Tesla Autopilot reported a significantly earlier year. Finally, we made a comparison between the crowdsourced respondents and respondents from a technical university who answered the same question; the median year reported by the latter group was 2040. We conclude that over the course of 4.5 years the public has moderated its expectations regarding the penetration of fully automated cars but remains optimistic compared to what experts currently believe.

2018

-

-

An auditory dataset of passing vehicles recorded with a smartphone

Bazilinskyy, P., Van der Aa, A., Schoustra, M., Spruit, J., Staats, L., Van der Vlist, K. J., De Winter, J. C. F.

Proceedings of Tools and Methods of Competitive Engineering (TMCE). Las Palmas de Gran Canaria, Spain (2018)

The increased use of smartphones over the past decade has contributed to distraction in traffic. However, smartphones could potentially be turned into an advantage by being able to detect whether a motorized vehicle is passing the smartphone user (e.g., a pedestrian or cyclist). Herein, we present a dataset of audio recordings of passing vehicles, made with a smartphone. Recordings were made of a passing passenger car and a scooter in various conditions (windy vs. calm weather, approaching from the front vs. from behind, 1 m, 2 m, and 3 m distance between smartphone and vehicle, vehicle driving at 30 vs. 50 km/h, and smartphone being stationary vs. moving with the cyclist). Data from an 8-microphone array, video recordings, and GPS data of vehicle position and speed are provided as well. The present dataset may prove useful in the development of mobile apps that detect a passing motorized vehicle, or for transportation research.

-

-

Auditory interface for automated driving

Bazilinskyy, P.

PhD thesis (2018)
